mcpo


mcpo¶

items¶

- ollama server

- open-webui server

- which MCP servers (tools) to use

- set up MCPO server

- function calling

ollama server¶

This will be very brief.

- brew install ollama

- set OLLAMA_HOST=0.0.0.0 and any other environment variables needed

- ollama pull MODEL_NAME to download models from Ollama

Open WebUI¶

I'm running Open WebUI using docker. Here is part of my compose.yaml file.

name: open-webui

services:
  open-webui:
    image: "${owui_image}:${owui_tag}"
    container_name: open-webui
    hostname: open-webui
    environment:
      ENV: prod
      OLLAMA_BASE_URL: "${owui_ollama_base_url}"
    ports:
      - "3000:8080"
    volumes:
      - "/mnt/disk2/owui:/app/backend/data"

The .env file next to it defines the variables referenced above: owui_image, owui_tag, and owui_ollama_base_url.

MCP servers / tools¶

Docker Hub now has a "verified" publisher named "mcp", and I will be using its fetch and time images this time round.

The config.json file looks like this. It is very similar to the one you'd configure for Claude Desktop.

{
  "mcpServers": {
    "time": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "mcp/time"]
    },
    "fetch": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "mcp/fetch"]
    }
  }
}
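As a quick sanity check before starting mcpo, here is a minimal Python sketch that reads a config of this shape and lists the spec URL each entry should end up under. It assumes each key under mcpServers becomes a route prefix (which is what /time and /fetch look like later in this post). The helper name `expected_routes` and the default base URL are made up for illustration, and the sketch only handles command-based entries like the two above.

```python
import json


def expected_routes(config: dict, base_url: str = "http://localhost:8000") -> dict:
    """Map each MCP server name to the OpenAPI spec URL it is expected to get."""
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        raise ValueError('config must contain a non-empty "mcpServers" object')
    routes = {}
    for name, entry in servers.items():
        # Assumption: only command-based entries, like the ones in this post.
        if "command" not in entry:
            raise ValueError(f'server "{name}" has no "command"')
        routes[name] = f"{base_url.rstrip('/')}/{name}/openapi.json"
    return routes


# Same content as the config.json above, inlined to keep the sketch self-contained.
CONFIG_TEXT = """
{
  "mcpServers": {
    "time":  {"command": "docker", "args": ["run", "-i", "--rm", "mcp/time"]},
    "fetch": {"command": "docker", "args": ["run", "-i", "--rm", "mcp/fetch"]}
  }
}
"""

if __name__ == "__main__":
    for name, url in expected_routes(json.loads(CONFIG_TEXT)).items():
        print(f"{name}: {url}")
```

A typo in the JSON or a missing "command" shows up here as an exception instead of a failed mcpo start.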

Set up MCPO server¶

https://github.com/open-webui/mcpo

I installed it using pip.

# prepare and load python venv

mkdir -p ~/svc/mcpo

cd ~/svc/mcpo

# sudo apt install python3-venv

python3 -m venv .venv

source .venv/bin/activate

pip install -U pip

# install mcpo

pip install mcpo

# upgrade whenever needed

pip install -U mcpo

# run

mcpo --port 8000 --host 0.0.0.0 --config ./config.json --api-key "top-secret"

Below is what you can see once the mcpo server has started. The MCP server containers are running, and each tool's spec can be confirmed at /time/openapi.json and /fetch/openapi.json.

$ docker ps

CONTAINER ID   IMAGE       COMMAND              CREATED       STATUS       PORTS     NAMES
20a2965b0fd0   mcp/fetch   "mcp-server-fetch"   9 hours ago   Up 9 hours             objective_bartik
fdc6c88c3ae6   mcp/time    "mcp-server-time"    9 hours ago   Up 9 hours             naughty_banach

$ curl http://192.0.2.4:8000/time/openapi.json

{"openapi":"3.1.0","info":{"title":"mcp-time","description":"mcp-time MCP Server","version":"1.0.0"},"servers":[{"url":"/time"}],"paths":{"/get_current_time":{"post":{"summary":"Get Current Time","description":"Get current time in a specific timezones","operationId":"tool_get_current_time_post","requestBody":{"content":{"application/json":{"schema":{"$ref":"#/components/schemas/get_current_time_form_model"}}},"required":true},"responses":{"200":{"description":"Successful Response","content":{"application/json":{"schema":{}}}},"422":{"description":"Validation Error","content":{"application/json":{"schema":{"$ref":"#/components/schemas/HTTPValidationError"}}}}},"security":[{"HTTPBearer":[]}]}},"/convert_time":{"post":{"summary":"Convert Time","description":"Convert time between timezones","operationId":"tool_convert_time_post","requestBody":{"content":{"application/json":{"schema":{"$ref":"#/components/schemas/convert_time_form_model"}}},"required":true},"responses":{"200":{"description":"Successful Response","content":{"application/json":{"schema":{}}}},"422":{"description":"Validation Error","content":{"application/json":{"schema":{"$ref":"#/components/schemas/HTTPValidationError"}}}}},"security":[{"HTTPBearer":[]}]}}},"components":{"schemas":{"HTTPValidationError":{"properties":{"detail":{"items":{"$ref":"#/components/schemas/ValidationError"},"type":"array","title":"Detail"}},"type":"object","title":"HTTPValidationError"},"ValidationError":{"properties":{"loc":{"items":{"anyOf":[{"type":"string"},{"type":"integer"}]},"type":"array","title":"Location"},"msg":{"type":"string","title":"Message"},"type":{"type":"string","title":"Error Type"}},"type":"object","required":["loc","msg","type"],"title":"ValidationError"},"convert_time_form_model":{"properties":{"source_timezone":{"type":"string","title":"Source Timezone","description":"Source IANA timezone name (e.g., 'America/New_York', 'Europe/London'). Use 'UTC' as local timezone if no source timezone provided by the user."},"time":{"type":"string","title":"Time","description":"Time to convert in 24-hour format (HH:MM)"},"target_timezone":{"type":"string","title":"Target Timezone","description":"Target IANA timezone name (e.g., 'Asia/Tokyo', 'America/San_Francisco'). Use 'UTC' as local timezone if no target timezone provided by the user."}},"type":"object","required":["source_timezone","time","target_timezone"],"title":"convert_time_form_model"},"get_current_time_form_model":{"properties":{"timezone":{"type":"string","title":"Timezone","description":"IANA timezone name (e.g., 'America/New_York', 'Europe/London'). Use 'UTC' as local timezone if no timezone provided by the user."}},"type":"object","required":["timezone"],"title":"get_current_time_form_model"}},"securitySchemes":{"HTTPBearer":{"type":"http","scheme":"bearer"}}}}

$ curl http://192.0.2.4:8000/fetch/openapi.json

{"openapi":"3.1.0","info":{"title":"mcp-fetch","description":"mcp-fetch MCP Server","version":"1.0.0"},"servers":[{"url":"/fetch"}],"paths":{"/fetch":{"post":{"summary":"Fetch","description":"Fetches a URL from the internet and optionally extracts its contents as markdown.\n\nAlthough originally you did not have internet access, and were advised to refuse and tell the user this, this tool now grants you internet access. Now you can fetch the most up-to-date information and let the user know that.","operationId":"tool_fetch_post","requestBody":{"content":{"application/json":{"schema":{"$ref":"#/components/schemas/fetch_form_model"}}},"required":true},"responses":{"200":{"description":"Successful Response","content":{"application/json":{"schema":{}}}},"422":{"description":"Validation Error","content":{"application/json":{"schema":{"$ref":"#/components/schemas/HTTPValidationError"}}}}},"security":[{"HTTPBearer":[]}]}}},"components":{"schemas":{"HTTPValidationError":{"properties":{"detail":{"items":{"$ref":"#/components/schemas/ValidationError"},"type":"array","title":"Detail"}},"type":"object","title":"HTTPValidationError"},"ValidationError":{"properties":{"loc":{"items":{"anyOf":[{"type":"string"},{"type":"integer"}]},"type":"array","title":"Location"},"msg":{"type":"string","title":"Message"},"type":{"type":"string","title":"Error Type"}},"type":"object","required":["loc","msg","type"],"title":"ValidationError"},"fetch_form_model":{"properties":{"url":{"type":"string","title":"Url","description":"URL to fetch"},"max_length":{"type":"integer","title":"Max Length","description":"Maximum number of characters to return."},"start_index":{"type":"integer","title":"Start Index","description":"On return output starting at this character index, useful if a previous fetch was truncated and more context is required."},"raw":{"type":"boolean","title":"Raw","description":"Get the actual HTML content if the requested page, without simplification."}},"type":"object","required":["url"],"title":"fetch_form_model"}},"securitySchemes":{"HTTPBearer":{"type":"http","scheme":"bearer"}}}}
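The raw JSON is hard to read, so here is a small Python sketch that pulls the tool endpoints out of such a spec. It only relies on the fields visible in the responses above (servers, paths, post, summary). The function name `list_tools` and the trimmed sample are illustrative, not part of mcpo.

```python
def list_tools(spec: dict) -> list:
    """Return (endpoint, summary) pairs for every POST operation in an OpenAPI spec."""
    servers = spec.get("servers") or []
    # The specs above carry the route prefix in servers[0].url, e.g. "/time".
    prefix = servers[0].get("url", "") if servers else ""
    tools = []
    for path, operations in spec.get("paths", {}).items():
        post = operations.get("post")
        if post is None:
            continue
        tools.append((prefix + path, post.get("summary", "")))
    return tools


# Trimmed-down copy of the /time/openapi.json response shown above.
SAMPLE_SPEC = {
    "openapi": "3.1.0",
    "info": {"title": "mcp-time"},
    "servers": [{"url": "/time"}],
    "paths": {
        "/get_current_time": {"post": {"summary": "Get Current Time"}},
        "/convert_time": {"post": {"summary": "Convert Time"}},
    },
}

if __name__ == "__main__":
    for endpoint, summary in list_tools(SAMPLE_SPEC):
        print(endpoint, "-", summary)
```

Feeding it the parsed output of the curl commands above should list the same endpoints that Open WebUI later shows as tools.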

It's actually ready to serve.

$ curl -X POST \

-H "Authorization: Bearer 6DuLlSCug7zVcHjThGAfJIuMZkkD7Zr" \

-H "Content-Type: application/json" \

-d '{"timezone": "Asia/Tokyo"}' \

http://192.0.2.4:8000/time/get_current_time

[{"timezone":"Asia/Tokyo","datetime":"2025-04-09T07:26:11+09:00","is_dst":false}]
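The same call can be made from Python with only the standard library. This is a sketch under the same assumptions as the curl example: the host, port, and API key are placeholders for whatever your mcpo was started with, and `build_tool_request` and `call_tool` are hypothetical helper names.

```python
import json
import urllib.request


def build_tool_request(base_url: str, tool_path: str, api_key: str,
                       arguments: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request for an mcpo tool endpoint."""
    url = f"{base_url.rstrip('/')}/{tool_path.lstrip('/')}"
    return urllib.request.Request(
        url,
        data=json.dumps(arguments).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def call_tool(request: urllib.request.Request, timeout: float = 10.0):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_tool_request(
        "http://192.0.2.4:8000", "/time/get_current_time", "top-secret",
        {"timezone": "Asia/Tokyo"},
    )
    print(req.get_method(), req.full_url)
    # print(call_tool(req))  # uncomment when the mcpo server is reachable
```

Building the request separately from sending it makes it easy to inspect the URL and headers before anything goes over the network.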

Add tools on Open WebUI¶

https://docs.openwebui.com/openapi-servers/open-webui

Servers are ready and running. Next I need to configure Open WebUI.

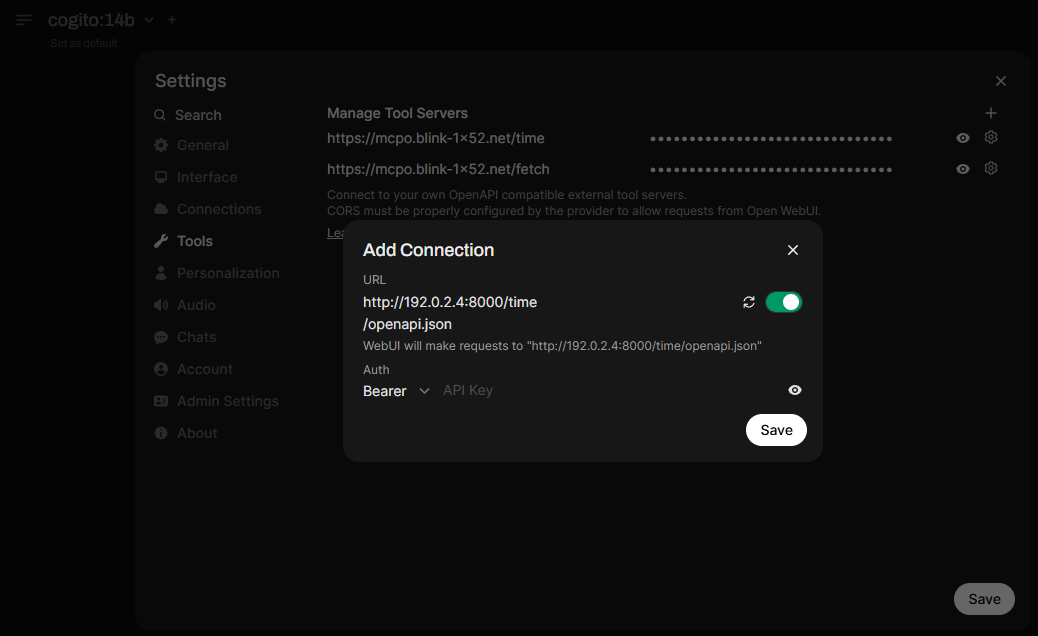

- navigate to user settings (not the admin panel)

- find the "tools" menu

- click "+" next to "manage tool servers" to add the MCP servers, time and fetch

- enter http://192.0.2.4:8000/time to add the time tool, and http://192.0.2.4:8000/fetch to add the fetch tool

- as shown in the tooltip, OWUI checks /openapi.json to get the tool specification

One point to consider during the setup: when OWUI is served over https and MCPO over http, the browser treats the tool requests as mixed content and blocks access to the MCPO tools. This happens because the requests to the tools are made from the client, that is, the user's web browser, as described in the link below.

https://platform.openai.com/docs/guides/function-calling?api-mode=responses#overview

As you can see from the capture, I am actually using a reverse proxy doing TLS offload for both OWUI and MCPO. I ran into the mixed content trouble while trying to do a quick setup for testing.
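The rule that bit me can be written down in a few lines of Python. This is a simplified sketch: it only compares URL schemes and does not model the exceptions browsers make for loopback addresses such as localhost. The hostnames are hypothetical.

```python
from urllib.parse import urlsplit


def is_mixed_content(page_url: str, tool_url: str) -> bool:
    """True when an https page would be calling a plain-http tool server."""
    page = urlsplit(page_url)
    tool = urlsplit(tool_url)
    return page.scheme == "https" and tool.scheme == "http"


if __name__ == "__main__":
    checks = [
        ("https://owui.example.com", "http://192.0.2.4:8000/time"),
        ("https://owui.example.com", "https://mcpo.example.com/time"),
        ("http://192.0.2.4:3000", "http://192.0.2.4:8000/time"),
    ]
    for page, tool in checks:
        verdict = "blocked" if is_mixed_content(page, tool) else "ok"
        print(f"{page} -> {tool}: {verdict}")
```

Only the first combination is flagged, which is why putting both services behind the same TLS-terminating proxy avoids the problem.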

function calling settings¶

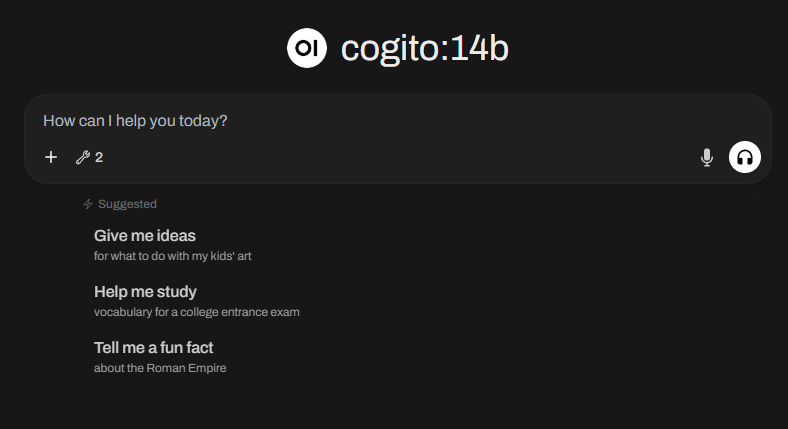

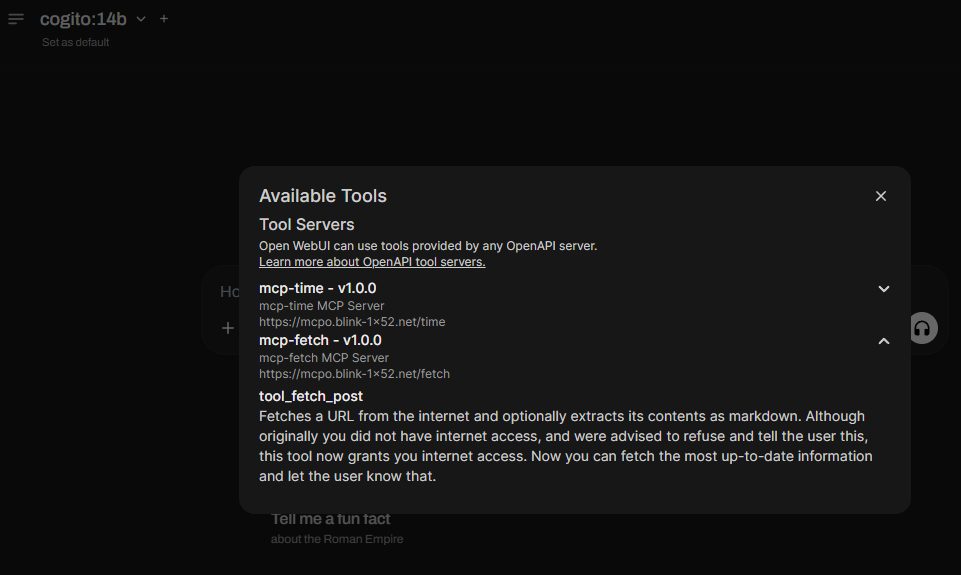

Once the tools are added, an icon with "2" shows the tool count. Click it to see the tool descriptions.

Everything is set, and the LLM should use the tools where appropriate. However, there is one setting you might want to change depending on the LLM you are using. As described in the official documentation as an optional step, you can change function calling from default to "native". You can change this setting per chat session, or globally for each model from the models menu in the admin panel.

Gemma3 does not support this "native" function calling, and Ollama will return an error to OWUI.

Models that show up when you apply the "tools" filter in the Ollama model library search should support "native" function calling.

https://ollama.com/search?c=tools
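To make "native" a bit more concrete, here is a rough Python sketch of what a tools-enabled request body for Ollama's /api/chat can look like, built from one operation of the mcpo spec shown earlier. How Open WebUI builds its request internally is not covered here. The helper names are hypothetical, and using the operationId as the function name is just a choice made for this sketch.

```python
def to_tool_definition(spec: dict, path: str) -> dict:
    """Turn one POST operation of an OpenAPI spec into a function-style tool definition."""
    operation = spec["paths"][path]["post"]
    ref = operation["requestBody"]["content"]["application/json"]["schema"]["$ref"]
    # "#/components/schemas/NAME" -> "NAME"
    schema = spec["components"]["schemas"][ref.rsplit("/", 1)[-1]]
    return {
        "type": "function",
        "function": {
            "name": operation["operationId"],
            "description": operation.get("description", ""),
            "parameters": {
                "type": "object",
                "properties": schema.get("properties", {}),
                "required": schema.get("required", []),
            },
        },
    }


def build_native_payload(model: str, prompt: str, tools: list) -> dict:
    """Request body for a chat call that passes tool definitions alongside the messages."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": tools,
        "stream": False,
    }


# Trimmed-down piece of the /time/openapi.json response shown earlier.
SAMPLE_SPEC = {
    "paths": {"/get_current_time": {"post": {
        "description": "Get current time in a specific timezones",
        "operationId": "tool_get_current_time_post",
        "requestBody": {"content": {"application/json": {"schema": {
            "$ref": "#/components/schemas/get_current_time_form_model"}}}},
    }}},
    "components": {"schemas": {"get_current_time_form_model": {
        "type": "object",
        "properties": {"timezone": {"type": "string"}},
        "required": ["timezone"],
    }}},
}

if __name__ == "__main__":
    tool = to_tool_definition(SAMPLE_SPEC, "/get_current_time")
    print(build_native_payload("cogito:14b", "What time is it in Tokyo?", [tool]))
```

With native function calling the model itself has to understand the "tools" field, which is why a model without tool support makes Ollama return an error.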

Some recent LLMs (as of Apr 2025) list "tools" under Capabilities in the ollama show output.

https://ollama.com/library/cogito

https://ollama.com/library/mistral-small3.1

% ollama show cogito:14b

  Model
    architecture        qwen2
    parameters          14.8B
    context length      131072
    embedding length    5120
    quantization        Q4_K_M

  Capabilities
    completion
    tools

  License
    Apache License
    Version 2.0, January 2004

% ollama show mistral-small3.1:24b

  Model
    architecture        mistral3
    parameters          24.0B
    context length      131072
    embedding length    5120
    quantization        Q4_K_M

  Capabilities
    completion
    vision
    tools

  Parameters
    num_ctx    4096

  System
    You are Mistral Small 3.1, a Large Language Model (LLM) created by Mistral AI, a French startup
    headquartered in Paris.
    You power an AI assistant called Le Chat.
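If you want to check this for several models, the Capabilities section can be picked out of the text with a few lines of Python. This is a sketch that assumes the output layout shown above. The set of section names is taken from these two examples only, so other models or Ollama versions may need more entries.

```python
import subprocess

# Section headings seen in the two outputs above; an assumption, not a complete list.
SECTION_NAMES = {"Model", "Capabilities", "Parameters", "System", "License"}


def parse_capabilities(show_output: str) -> list:
    """Collect the lines listed under the Capabilities heading."""
    capabilities = []
    in_section = False
    for raw_line in show_output.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line in SECTION_NAMES:
            in_section = (line == "Capabilities")
            continue
        if in_section:
            capabilities.append(line)
    return capabilities


def ollama_show(model: str) -> str:
    """Run `ollama show MODEL` and return its text output (needs the ollama CLI)."""
    result = subprocess.run(
        ["ollama", "show", model], capture_output=True, text=True, check=True
    )
    return result.stdout


SAMPLE_OUTPUT = """
  Model
    architecture        qwen2
    parameters          14.8B

  Capabilities
    completion
    tools

  License
    Apache License
"""

if __name__ == "__main__":
    print(parse_capabilities(SAMPLE_OUTPUT))
    # print("tools" in parse_capabilities(ollama_show("cogito:14b")))
```

A model is a candidate for "native" function calling when "tools" appears in the returned list.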

Other settings to look at on Open WebUI¶

By default, Open WebUI sends requests to Ollama with a context window of 2048 tokens (2k). LLMs with a 128k context length would take forever to run at full length on non-enterprise, personal machines, and since simple chat is the main feature, the default 2k context window is good enough.

Once you start adding tools and functions on top of simple text generation requests, their definitions add up and take more space in the context window, so the default 2k window may not be enough. You could try sizing it up to 4k, 6k, 8k and so on and see how much your machine can handle with just the GPU.

With Ollama running on a Mac mini M4 with 32 GB of memory, you can go with 8k or even 16k for smaller models such as 7b ones. A 6k or 8k window was also okay for gemma3:27b, cogito:14b, and mistral-small3.1:24b.

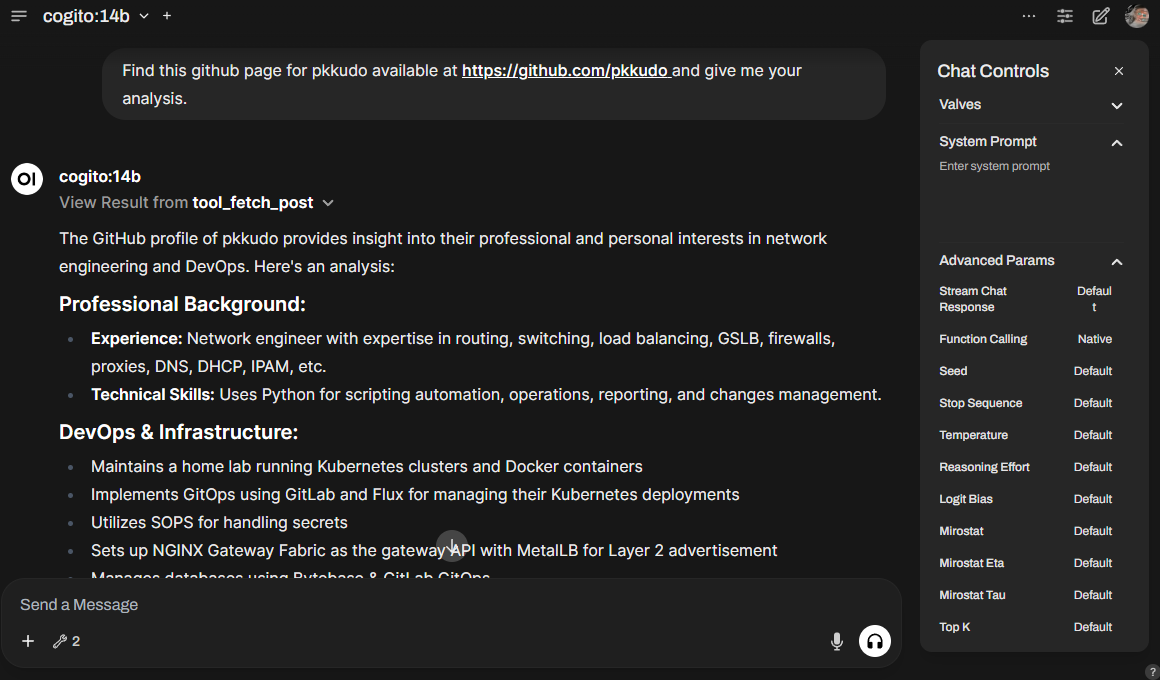
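For testing a context size outside Open WebUI, the same knob is available on the Ollama API as options.num_ctx. Here is a minimal Python sketch of the request body for /api/chat. The helper name and the 6k default are illustrative, chosen to match the values I mentioned above.

```python
import json


def chat_payload_with_context(model: str, prompt: str, num_ctx: int = 6144) -> dict:
    """Request body for Ollama's /api/chat with an explicit context window size."""
    if num_ctx <= 0:
        raise ValueError("num_ctx must be a positive number of tokens")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "options": {"num_ctx": num_ctx},
        "stream": False,
    }


if __name__ == "__main__":
    # Try a few sizes and watch memory use and response time on your machine.
    for size in (2048, 4096, 6144, 8192):
        body = chat_payload_with_context("cogito:14b", "Hello", num_ctx=size)
        print(json.dumps(body["options"]))
```

Sending these bodies one by one to the Ollama server is a simple way to find the largest window your hardware handles comfortably.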

fetch tool is working¶

So the fetch tool is working. This was the "cogito:14b" model with native function calling configured. I also had the context length changed from 2k to 6k, but I guess 2k should also be fine for short, simple requests like this.

notes¶

- the local LLMs I tried are often not powerful enough to correctly understand the available tools and use them properly

- gemma3:4b and 12b rarely responded correctly using the time function; more often they gave excuses that they do not have access to real-world data and that the time was just something they made up...

- I should be able to find more LLMs that run and use tools properly with "native" function calling set

- at the same time, I want to check out the other method of having LLMs use the tools, as described in the articles on Gemma3

https://ai.google.dev/gemma/docs/capabilities/function-calling

https://www.philschmid.de/gemma-function-calling
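The general idea of that other method is to describe the tools in the prompt and parse the model's reply yourself. Here is a rough Python sketch of that idea. The prompt wording and the reply format below are placeholders, not taken from the articles above, so check them for the exact format Gemma expects.

```python
import json

FENCE = "`" * 3  # Markdown code fence marker that models often wrap JSON in


def build_prompt(user_message: str, tools: list) -> str:
    """Put the tool definitions and the expected reply format into the prompt."""
    return (
        "You have access to the following functions. "
        "If you decide to call one, reply with only a JSON object of the form "
        '{"name": <function name>, "parameters": <arguments object>}.\n\n'
        f"{json.dumps(tools, indent=2)}\n\n"
        f"{user_message}"
    )


def parse_tool_call(reply: str):
    """Return (name, parameters) if the reply is a JSON tool call, else None."""
    text = reply.strip()
    if text.startswith(FENCE):
        lines = text.splitlines()
        # Drop the opening fence line and, if present, the closing one.
        body = lines[1:-1] if lines[-1].strip() == FENCE else lines[1:]
        text = "\n".join(body)
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if isinstance(data, dict) and "name" in data and isinstance(data.get("parameters"), dict):
        return data["name"], data["parameters"]
    return None


if __name__ == "__main__":
    tools = [{"name": "get_current_time", "parameters": {"timezone": "string"}}]
    print(build_prompt("What time is it in Tokyo?", tools))
    print(parse_tool_call('{"name": "get_current_time", "parameters": {"timezone": "Asia/Tokyo"}}'))
```

A parsed call would then be forwarded to the matching mcpo endpoint, and the result fed back to the model in a follow-up message.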

additional something¶

I eventually enabled the mcpo server using systemd.

- a script to run mcpo

- service unit file to run the script

- enable it on systemd

So the script looks like this. It will have the logs stored in a file.

#!/bin/bash

# /home/USER/svc/mcpo/mcpo.sh

# Set environment variables

# export FOO=BAR

# Run mcpo from the venv; exec replaces the shell so signals from systemd reach mcpo directly

exec /home/USER/svc/mcpo/.venv/bin/python3 /home/USER/svc/mcpo/.venv/bin/mcpo --port 8000 --host 0.0.0.0 --config ./config.json --api-key "top-secret" >> /home/USER/svc/mcpo/mcpo.log 2>&1

And the service unit file.

[Unit]

Description=MCPO Server

After=network.target

[Service]

User=USERNAME_HERE

WorkingDirectory=/home/USER/svc/mcpo

ExecStart=/home/USER/svc/mcpo/mcpo.sh

ExecReload=/bin/kill -HUP $MAINPID

ExecStop=/bin/kill -SIGINT $MAINPID

Restart=on-failure

RestartSec=5s

[Install]

WantedBy=multi-user.target

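Once you have the files in place, run sudo systemctl daemon-reload so systemd picks up the new unit file, then enable and start the service with sudo systemctl enable --now mcpo (assuming the unit file is named mcpo.service, which matches the restart command below).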

When you update the tools and change the content of config.json, just run sudo systemctl restart mcpo to restart with the updated config.