building homelab cluster part 1


I rebuild my homelab Kubernetes cluster from time to time. This series is my record of rebuilding it on bare-metal machines.

nodes

The cluster has 5 nodes, 2 of which are control plane nodes.

Two more machines run docker containers: reverse proxy, MFA, GitLab, Harbor, and so on.

The last one, a Raspberry Pi 4, is the ansible master that runs maintenance playbooks.

| hostname | role | os | arch | cores | memory | disk | additional disk |
|---|---|---|---|---|---|---|---|
| rpi4bp | control plane | debian 12.5 | arm64 | 4 | 4Gi | 64GB | n/a |
| livaz2 | control plane | debian 12.5 | amd64 | 4 | 16Gi | 128GB | 6000GB, 5000Mbps |
| livaq2 | worker node | debian 12.5 | amd64 | 4 | 4Gi | 64GB | n/a |
| ak3v | worker node | debian 12.5 | amd64 | 2 | 8Gi | 128GB | 500GB, 5000Mbps |
| nb5 | worker node | debian 12.5 | amd64 | 4 | 8Gi | 128GB | 500GB, 5000Mbps |
| gk41 | docker, non-k8s | debian 12.5 | amd64 | 4 | 8Gi | 128GB | n/a |
| th80 | docker, non-k8s | debian 11.9 | amd64 | 16 | 16Gi | 512GB | 500GB, 480Mbps |
| rpi4 | ansible master, non-k8s | debian 11.9 | arm64 | 4 | 4Gi | 32GB | n/a |

k8s-ready nodes

I am skipping the cluster build itself.

It is just a matter of following the official Kubernetes documentation, and I have also written a blog post about it in Japanese: Kubernetesクラスタ構築 - おうちでGitOpsシリーズ投稿2/6. I plan to add a link to a separate page or public repository with the actual ansible playbooks I use to make the nodes k8s-ready.

containerd and pause

One thing worth noting here is the pause image. Once kubeadm is installed on the control plane node, you can run `kubeadm config images list` to list the images Kubernetes uses. containerd also uses the "pause" image, and its tag is set in the containerd configuration file. Set the same tag (version) there as the one shown in the kubeadm output.

registry.k8s.io/kube-apiserver:v1.30.2

registry.k8s.io/kube-controller-manager:v1.30.2

registry.k8s.io/kube-scheduler:v1.30.2

registry.k8s.io/kube-proxy:v1.30.2

registry.k8s.io/coredns/coredns:v1.11.1

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.12-0
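
To compare the two values quickly, something like the following could be used. This is only a sketch: `pause_image_from_list` is a helper name made up for this example, and the config path `/etc/containerd/config.toml` and the `sandbox_image` key assume a containerd 1.x setup with the CRI plugin configured in the default location.

```shell
# Hypothetical helper: read an image list on stdin (one image per line)
# and print the first pause image reference found.
pause_image_from_list() {
  grep -E '^registry\.k8s\.io/pause:' | head -n 1
}

# On a real node (assumed paths, adjust to your setup):
#   kubeadm config images list | pause_image_from_list
#   grep sandbox_image /etc/containerd/config.toml

# Demo using part of the list shown above
printf '%s\n' \
  'registry.k8s.io/coredns/coredns:v1.11.1' \
  'registry.k8s.io/pause:3.9' \
  'registry.k8s.io/etcd:3.5.12-0' | pause_image_from_list
```

If the tag printed here differs from the one in the containerd config, update the config and restart containerd.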

apply network addon to the cluster

Next, I manually applied the calico installation manifests to make the cluster ready for service.

https://github.com/projectcalico/calico

# confirm the latest version on cli

curl -sfL "https://api.github.com/repos/projectcalico/calico/releases/latest" | grep "tag_name"

# download v3.27.0

curl -LO https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/tigera-operator.yaml

curl -LO https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/custom-resources.yaml

# modify custom-resources.yaml

# and then apply

kubectl create -f tigera-operator.yaml

kubectl create -f custom-resources.yaml

# watch and wait for everything to be ready

watch kubectl get pods -n calico-system -o wide

# remove the control-plane taint so that workloads can also be scheduled on the control plane nodes

kubectl taint nodes --all node-role.kubernetes.io/control-plane-
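
The version check at the top of these commands prints the whole matching JSON line. If only the tag is wanted, for example to build the manifest URLs, a small filter could extract it. This is a sketch: `release_tag` is a name invented here, and the sample JSON in the demo is made up rather than a real API response.

```shell
# Hypothetical helper: print the value of "tag_name" from a GitHub
# release JSON document read on stdin.
release_tag() {
  sed -n 's/.*"tag_name": *"\([^"]*\)".*/\1/p' | head -n 1
}

# Against the real API (requires network access):
#   curl -sfL "https://api.github.com/repos/projectcalico/calico/releases/latest" | release_tag

# Demo with a trimmed, made-up sample response
printf '%s\n' '{' '  "tag_name": "v3.27.0",' '  "name": "v3.27.0"' '}' | release_tag
```

A JSON-aware tool would be more robust if one is installed; the sed version only avoids an extra dependency.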

Here is my calico custom-resources.yaml file. The only thing I changed is the cidr.

# This section includes base Calico installation configuration.
# For more information, see: https://docs.tigera.io/calico/latest/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 26
      cidr: 10.244.0.0/16
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()

---

# This section configures the Calico API server.
# For more information, see: https://docs.tigera.io/calico/latest/reference/installation/api#operator.tigera.io/v1.APIServer
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}
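
Since the cidr is the only change, the edit can be scripted instead of done by hand. The following is a sketch under assumptions: `set_pool_cidr` is a made-up helper, and the `192.168.0.0/16` value in the demo is just a placeholder for whatever the downloaded file contains.

```shell
# Hypothetical helper: rewrite the cidr value in a custom-resources.yaml
# style file, keeping the original indentation. A .bak copy is left behind.
set_pool_cidr() {
  # $1 = file, $2 = new CIDR
  sed -i.bak -E "s#^([[:space:]]*cidr:).*#\1 $2#" "$1"
}

# Demo on a temporary file with a placeholder CIDR
tmp="$(mktemp)"
printf '%s\n' '    ipPools:' '    - blockSize: 26' '      cidr: 192.168.0.0/16' > "$tmp"
set_pool_cidr "$tmp" 10.244.0.0/16
grep 'cidr:' "$tmp"
```

As the comment in the manifest says, the ipPools section cannot be modified after installation, so this has to happen before `kubectl create -f custom-resources.yaml`.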

And here is the resulting node list (`kubectl get nodes -o wide`).

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ak3v Ready <none> 36m v1.29.2 192.168.1.57 <none> Debian GNU/Linux 12 (bookworm) 6.1.0-18-amd64 containerd://1.7.13

livaq2 Ready <none> 16m v1.29.2 192.168.1.56 <none> Debian GNU/Linux 12 (bookworm) 6.1.0-18-amd64 containerd://1.7.13

livaz2 Ready control-plane 2d2h v1.29.2 192.168.1.52 <none> Debian GNU/Linux 12 (bookworm) 6.1.0-18-amd64 containerd://1.7.13

nb5 Ready <none> 103m v1.29.2 192.168.1.60 <none> Debian GNU/Linux 12 (bookworm) 6.1.0-18-amd64 containerd://1.7.13

rpi4bp Ready control-plane 2d3h v1.29.2 192.168.1.132 <none> Debian GNU/Linux 12 (bookworm) 6.1.0-rpi8-rpi-v8 containerd://1.7.13

installing calicoctl

https://docs.tigera.io/calico/latest/operations/calicoctl/install

curl -L https://github.com/projectcalico/calico/releases/download/v3.27.3/calicoctl-linux-amd64 -o kubectl-calico

chmod +x kubectl-calico

sudo mv kubectl-calico /usr/local/bin/.
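
The download above is for amd64, while this cluster also has arm64 machines. A sketch of picking the asset by architecture follows. `calicoctl_asset` is a helper name invented for this example, and the asset names are assumed to follow the `calicoctl-linux-<arch>` pattern seen in the URL above.

```shell
# Hypothetical helper: map a `uname -m` machine name to the calicoctl
# release asset name (assumed naming pattern: calicoctl-linux-<arch>).
calicoctl_asset() {
  case "$1" in
    x86_64)        echo "calicoctl-linux-amd64" ;;
    aarch64|arm64) echo "calicoctl-linux-arm64" ;;
    *)             echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Usage on a real machine (requires network access):
#   asset="$(calicoctl_asset "$(uname -m)")"
#   curl -L "https://github.com/projectcalico/calico/releases/download/v3.27.3/${asset}" -o kubectl-calico

# Demo for the two architectures in this homelab
calicoctl_asset x86_64
calicoctl_asset aarch64
```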

upgrading calico

Get the operator manifest for the new version and run kubectl replace -f {manifest}.

For example:

curl https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/tigera-operator.yaml -O

kubectl replace -f tigera-operator.yaml

gitops - install and bootstrap flux

I keep separate notes on flux, apart from this lab build: flux (edit: link removed)

Required permissions

To bootstrap Flux, the person running the command must have cluster admin rights for the target Kubernetes cluster. That person must also be the owner of the GitLab project, or have admin rights of a GitLab group.

Here is what I actually did this time. Nothing special.

- create a new repository

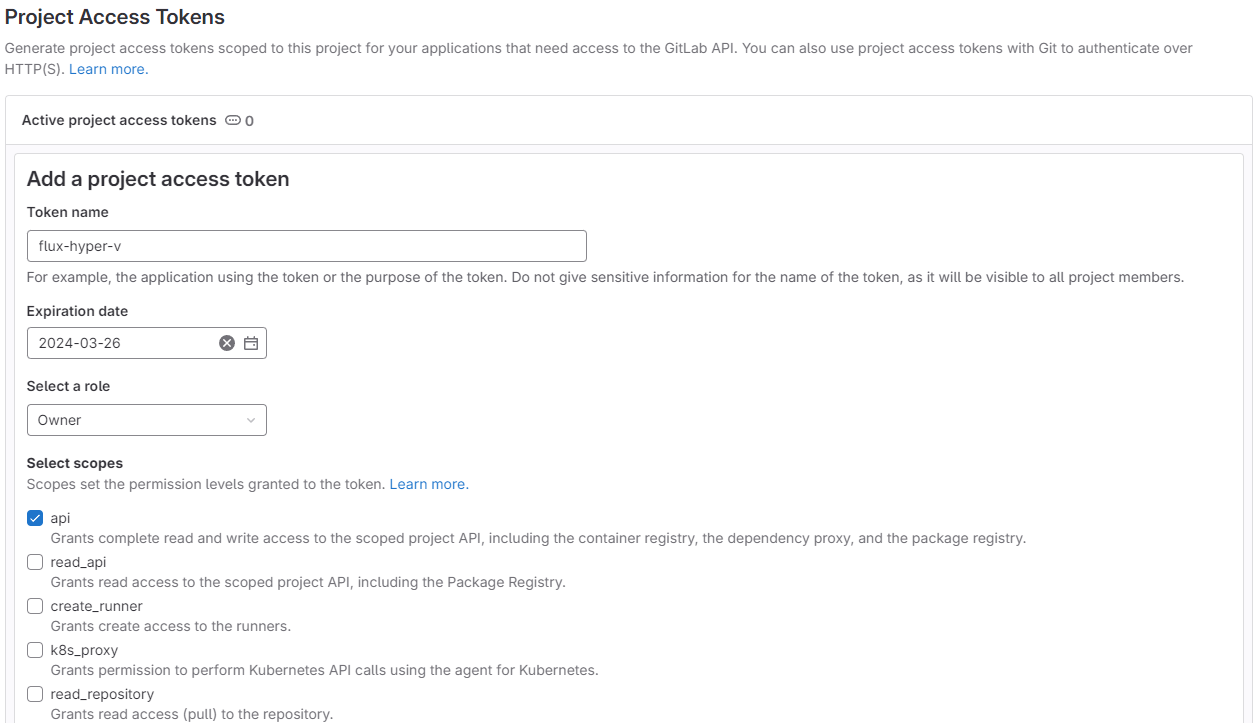

gitops/homelabon my self-managed GitLab - create an access token with owner role and api access

- install flux on whichever client machine with kubeconfig, management access to the control plane

- bootstrap

creating project access token for flux bootstrap

install flux

https://fluxcd.io/flux/installation/

https://github.com/fluxcd/flux2

bootstrap

Specify the cluster domain name, especially if it has been changed from the default cluster.local.

export GITLAB_TOKEN={token_string_here}

export GITLAB_SERVER={gitlab_server}

flux bootstrap gitlab \

--deploy-token-auth \

--hostname="$GITLAB_SERVER" \

--owner=gitops \

--repository=homelab \

--path=./clusters/homelab \

--branch=main \

--cluster-domain=cluster.local
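
Because the bootstrap command depends on both exported variables, a small guard can catch a missing one before anything touches the cluster. This is a sketch: `require_env` is a helper written for this example, and the values in the demo are dummies.

```shell
# Hypothetical helper: fail with a message if any named variable is
# unset or empty.
require_env() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      return 1
    fi
  done
}

# Intended use in front of the real command:
#   require_env GITLAB_TOKEN GITLAB_SERVER && flux check --pre && flux bootstrap gitlab ...

# Demo with dummy values
GITLAB_SERVER="gitlab.example.com"
GITLAB_TOKEN="dummy"
require_env GITLAB_TOKEN GITLAB_SERVER && echo "environment looks complete"
```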

Here is the log. It is from bootstrapping my hyper-v VM cluster, not the bare-metal one.

$ flux bootstrap gitlab \

--deploy-token-auth \

--hostname="$GITLAB_SERVER" \

--owner=gitops \

--repository=homelab \

--path=./clusters/hyper-v \

--branch=main

► connecting to https://cp.blink-1x52.net

► cloning branch "main" from Git repository "https://cp.blink-1x52.net/gitops/homelab.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ committed component manifests to "main" ("0759d8f2ea8a74943868e932ebdd1d707b7c0c40")

► pushing component manifests to "https://cp.blink-1x52.net/gitops/homelab.git"

► installing components in "flux-system" namespace

✔ installed components

✔ reconciled components

► checking to reconcile deploy token for source secret

✔ configured deploy token "flux-system-main-flux-system-./clusters/hyper-v" for "https://cp.blink-1x52.net/gitops/homelab"

► determining if source secret "flux-system/flux-system" exists

► generating source secret

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

► generating sync manifests

✔ generated sync manifests

✔ committed sync manifests to "main" ("3ec779843915ffad20a2dc220f5a4e65d5bdb2fa")

► pushing sync manifests to "https://cp.blink-1x52.net/gitops/homelab.git"

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for GitRepository "flux-system/flux-system" to be reconciled

✔ GitRepository reconciled successfully

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

► confirming components are healthy

✔ helm-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ notification-controller: deployment ready

✔ source-controller: deployment ready

✔ all components are healthy

$ flux get all

NAME REVISION SUSPENDED READY MESSAGE

gitrepository/flux-system main@sha1:3ec77984 False True stored artifact for revision 'main@sha1:3ec77984'

NAME REVISION SUSPENDED READY MESSAGE

kustomization/flux-system main@sha1:3ec77984 False True Applied revision: main@sha1:3ec77984

$ flux version

flux: v2.2.2

distribution: flux-v2.2.2

helm-controller: v0.37.2

kustomize-controller: v1.2.1

notification-controller: v1.2.3

source-controller: v1.2.3

gitops repository structure

https://fluxcd.io/flux/guides/repository-structure/

https://github.com/fluxcd/flux2-kustomize-helm-example

I will try to follow the guide as much as possible.

sops

https://fluxcd.io/flux/guides/mozilla-sops/

I will use gitops/homelab-sops as the repository for encrypted secrets.

First, I will generate a key pair, store it as a secret in the flux-system namespace, and upload the public key to the repository.

# install sops and gpg if not installed

# create gitops/homelab-sops repository on gitlab if not created yet

# set key name and comment

export KEY_NAME="homelab.blink-1x52.net"

export KEY_COMMENT="homelab flux secret"

# generate the keypair

gpg --batch --full-generate-key <<EOF

%no-protection

Key-Type: 1

Key-Length: 4096

Subkey-Type: 1

Subkey-Length: 4096

Expire-Date: 0

Name-Comment: ${KEY_COMMENT}

Name-Real: ${KEY_NAME}

EOF

# find the fingerprint of the generated keypair

# and export

gpg --list-secret-keys "${KEY_NAME}"

export KEY_FP={fingerprint of the generated keypair}

# create the secret using the keypair in the flux-system namespace

gpg --export-secret-keys --armor "${KEY_FP}" |

kubectl create secret generic sops-gpg \

--namespace=flux-system \

--from-file=sops.asc=/dev/stdin

# confirm that the secret is there

kubectl get secret -n flux-system sops-gpg

# clone and go to the homelab-sops project directory

git clone https://cp.blink-1x52.net/gitops/homelab-sops.git

cd homelab-sops

# create the directory for the homelab cluster and place the public key there

mkdir -p clusters/homelab

gpg --export --armor "${KEY_FP}" > ./clusters/homelab/.sops.pub.asc

# commit and push so that other members, or you on another machine, can import the key to encrypt secret manifests for this repository

# to import...

# gpg --import ./clusters/homelab/.sops.pub.asc

# delete the keypair from the local machine

gpg --delete-secret-keys "${KEY_FP}"

# configure encryption

cat <<EOF > ./clusters/homelab/.sops.yaml
creation_rules:
  - path_regex: .*.yaml
    encrypted_regex: ^(data|stringData)$
    pgp: ${KEY_FP}
EOF
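
The fingerprint lookup step in the commands above is done by eye. It could also be scripted with gpg's machine-readable `--with-colons` output, where the fingerprint is the tenth field of an `fpr` record. This is a sketch: `first_fingerprint` is a made-up helper, and the records in the demo are fabricated sample data, not a real key.

```shell
# Hypothetical helper: print the first fingerprint found in
# `gpg --with-colons` output read on stdin.
first_fingerprint() {
  awk -F: '$1 == "fpr" { print $10; exit }'
}

# On a real machine:
#   export KEY_FP="$(gpg --list-secret-keys --with-colons "${KEY_NAME}" | first_fingerprint)"

# Demo with made-up colon-format records
printf '%s\n' \
  'sec:u:4096:1:89ABCDEF01234567:1700000000:::u:::scESC:' \
  'fpr:::::::::0123456789ABCDEF0123456789ABCDEF01234567:' | first_fingerprint
```

The first `fpr` record follows the primary key record, so this picks the primary key's fingerprint rather than the subkey's.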

Next, I will add another git source for flux to watch, defined in the original gitops/homelab repository.

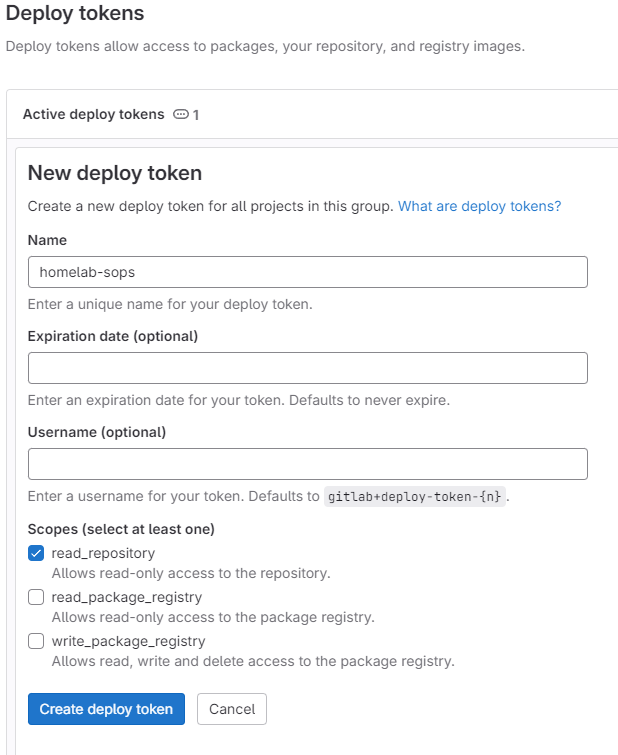

Unless gitops/homelab-sops is a public repository, flux needs credentials to read it. Let's create a token with read access for flux.

Navigate to the gitops/homelab-sops repository, go to settings > repository > deploy tokens, and click add token to create one. I named it "homelab-sops" and gave it the "read_repository" scope.

# create a secret from the deploy token to read the sops repository

flux create secret git deploy-token-homelab-sops \

--namespace=flux-system \

--username={deploy token name here} \

--password={deploy token string here} \

--url=https://cp.blink-1x52.net/gitops/homelab-sops.git

This is probably the last secret I have to push to the kubernetes cluster manually, since gitops by flux is in place and secret encryption will be available once the sops setup is done.

Next, I update the cluster setup in the original gitops/homelab repository so that it starts using the gitops/homelab-sops repository.

---
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: homelab-sops
  namespace: flux-system
spec:
  interval: 1m0s
  ref:
    branch: main
  secretRef:
    name: deploy-token-homelab-sops
  url: https://cp.blink-1x52.net/gitops/homelab-sops.git
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: homelab-sops
  namespace: flux-system
spec:
  decryption:
    provider: sops
    secretRef:
      name: sops-gpg
  interval: 1m0s
  path: ./clusters/homelab
  prune: true
  sourceRef:
    kind: GitRepository
    name: homelab-sops

Now everything is set. The homelab-sops flux kustomization fails until its path contains at least one manifest, so I will add a test secret.

# on gitops/homelab-sops repository

cd clusters/homelab

kubectl -n default create secret generic basic-auth \

--from-literal=user=admin \

--from-literal=password=change-me \

--dry-run=client \

-o yaml > basic-auth.yaml

sops --encrypt --in-place basic-auth.yaml

# see the secret manifest file basic-auth.yaml is now encrypted

# git commit and push

# wait for flux reconciliation

# confirm the status

flux get ks homelab-sops

# confirm that the secret was created

kubectl get secret basic-auth -n default -o jsonpath='{.data.user}' | base64 -d
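
Between the encrypt step and the commit, it is easy to push a plaintext manifest by mistake. A quick check before committing could look like this. It is a sketch: `is_sops_encrypted` is a helper invented here, and it relies only on the top-level `sops:` metadata block that sops adds to an encrypted YAML file.

```shell
# Hypothetical helper: succeed only if the YAML file carries the
# top-level "sops:" metadata block added by sops on encryption.
is_sops_encrypted() {
  grep -q '^sops:' "$1"
}

# Intended use in the repository:
#   is_sops_encrypted basic-auth.yaml || echo "basic-auth.yaml is NOT encrypted"

# Demo with a plaintext manifest in a temporary file
plain="$(mktemp)"
printf '%s\n' 'apiVersion: v1' 'kind: Secret' 'data:' '  user: YWRtaW4=' > "$plain"
is_sops_encrypted "$plain" || echo "not encrypted, do not commit"
```

The same check could run from a git pre-commit hook over every staged YAML file in the sops repository.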

calico manifest files

The network addon calico was installed manually, but I would like to keep the manifests in the repository. I copied the two files I used into ./clusters/homelab/network-addon/.

node labels

I will be installing directpv later, so I will go ahead and label the nodes that have additional disks.

apiVersion: v1
kind: Node
metadata:
  labels:
    app.kubernetes.io/part-of: directpv
  name: livaz2

kubernetes.io/hostname=livaz2

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/os=linux

node-role.kubernetes.io/control-plane=

kustomize.toolkit.fluxcd.io/name=flux-system

kustomize.toolkit.fluxcd.io/namespace=flux-system

app.kubernetes.io/part-of=directpv

beta.kubernetes.io/arch=amd64

directpv.min.io/node=livaz2
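
The same manifest is needed for each node with an additional disk (livaz2, ak3v, and nb5 in the table at the top). A small generator could produce the files. This is a sketch: `node_label_manifest` is a made-up helper, and the demo writes to a temporary directory rather than the real repository.

```shell
# Hypothetical helper: print a Node manifest that carries the directpv
# label for the given node name.
node_label_manifest() {
  cat <<EOF
apiVersion: v1
kind: Node
metadata:
  labels:
    app.kubernetes.io/part-of: directpv
  name: $1
EOF
}

# Demo: write one file per node into a temporary directory
outdir="$(mktemp -d)"
for node in livaz2 ak3v nb5; do
  node_label_manifest "$node" > "${outdir}/node-${node}-label.yaml"
done
ls "$outdir"
```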

repository structure so far

.

|-clusters

| |-homelab

| | |-network-addon

| | | |-custom-resources.yaml # calico config file

| | | |-tigera-operator.yaml # calico installation file

| | | |-version.txt # text file with version of the installed calico

| | |-flux-system # installed by flux

| | | |-kustomization.yaml

| | | |-gotk-sync.yaml

| | | |-gotk-components.yaml

| | |-sops.yaml # flux gitrepo and kustomization for sops repo

| | |-nodes # node labels

| | | |-node-livaz2-label.yaml

| | | |-node-ak3v-label.yaml

| | | |-node-nb5-label.yaml

|-.git