# building home lab part 4


In part 3, I installed the Kubernetes Gateway API v1.0.0 and NGINX Gateway Fabric (NGF) as its implementation.

In this part, I will set up DirectPV to provide persistent volumes, and use that disk space to run a MinIO S3 tenant. The S3 API will be exposed through NGINX Gateway Fabric.

DirectPV is managed with a kubectl plugin, which the official docs install through krew, so I will start this part from there.

- [x] prepare nodes (VMs on hyper-v in this series)

- [x] setup kubernetes cluster

- [x] bootstrap flux gitops

- [x] setup sops for secret encryption

- [x] install helm

- [x] metallb to l2 advertise svc on LAN

- [x] gateway api and nginx gateway fabric to set up an https gateway

- [ ] directpv to set up a storage class serving persistent volumes

- [ ] minio as s3 storage

- [ ] gitlab runner to execute CI/CD jobs on my gitlab

- [ ] kube-prometheus for monitoring

- [ ] loki for logging

- [ ] cert manager to manage tls certificates

- [ ] weave gitops dashboard

- [ ] kube-dashboard

- [ ] postgresql

- [ ] mongodb

- [ ] bytebase

- [ ] my private apps

## DirectPV

DirectPV is a CSI driver for Direct Attached Storage. In a simpler sense, it is a distributed persistent volume manager, and not a storage system like SAN or NAS.

### directpv plugin installation

https://github.com/minio/directpv/blob/master/docs/installation.md#installation-of-release-binary

krew has long lacked a way to pin plugin versions: you can install, upgrade, and uninstall plugins with it, but you cannot specify which version to install.

Instead, I download the release binary directly from the GitHub repository so that I control which version of directpv is installed.

```shell
# find the latest release
DIRECTPV_RELEASE=$(curl -sfL "https://api.github.com/repos/minio/directpv/releases/latest" | awk '/tag_name/ { print substr($2, 3, length($2)-4) }')

curl -fLo kubectl-directpv "https://github.com/minio/directpv/releases/download/v${DIRECTPV_RELEASE}/kubectl-directpv_${DIRECTPV_RELEASE}_linux_amd64"
chmod a+x kubectl-directpv
sudo mv kubectl-directpv /usr/local/bin/.
```
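Since the reason for skipping krew is version control, it may be worth pinning the version explicitly instead of resolving `latest` on every run. Below is a minimal sketch, assuming the release-asset naming used above (`kubectl-directpv_<version>_linux_<arch>`); `directpv_url` is a hypothetical helper, not part of directpv.

```shell
# Hypothetical helper: build the download URL for a pinned directpv release.
# Assumes the asset naming seen above: kubectl-directpv_<version>_linux_<arch>
directpv_url() {
  version="${1#v}"    # accept either "4.0.10" or "v4.0.10"
  arch="${2:-amd64}"  # default to amd64, as in the commands above
  printf 'https://github.com/minio/directpv/releases/download/v%s/kubectl-directpv_%s_linux_%s\n' \
    "$version" "$version" "$arch"
}
```

Usage: `curl -fLo kubectl-directpv "$(directpv_url 4.0.10)"`. Passing a second argument such as `arm64` only helps if the release actually publishes a binary for that architecture.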

### node labels

directpv can be installed on selected nodes only, as described in the documentation.

https://github.com/minio/directpv/blob/master/docs/installation.md#installing-on-selected-nodes

In this Hyper-V cluster, only the vworker5 VM has an additional disk attached, so I label that node first. The label is managed by flux through clusters/hyper-v/node-vworker5-labels.yaml:

```yaml
apiVersion: v1
kind: Node
metadata:
  labels:
    app.kubernetes.io/part-of: directpv
  name: vworker5
```

After flux reconciliation, the node carries the additional label.

```
$ kubectl label --list nodes vworker5
beta.kubernetes.io/os=linux
kubernetes.io/arch=amd64
kubernetes.io/hostname=vworker5
kubernetes.io/os=linux
kustomize.toolkit.fluxcd.io/name=flux-system
kustomize.toolkit.fluxcd.io/namespace=flux-system
app.kubernetes.io/part-of=directpv
beta.kubernetes.io/arch=amd64
```

### directpv installation to the cluster

The installation could be done by simply running `kubectl-directpv install --node-selector app.kubernetes.io/part-of=directpv`, but I would rather generate the manifest and commit it to the gitops repository.

```shell
cd {gitops repo}/infrastructure/controllers/crds

# generate the directpv installation manifest (v4.0.10 as of this writing)
kubectl-directpv install -o yaml > directpv-v${DIRECTPV_RELEASE}.yaml
```

This manifest creates many resources. The node selector I need to add goes on the DaemonSet.

```
$ grep ^kind directpv-v4.0.10.yaml
kind: Namespace
kind: ServiceAccount
kind: ClusterRole
kind: ClusterRoleBinding
kind: Role
kind: RoleBinding
kind: CustomResourceDefinition
kind: CustomResourceDefinition
kind: CustomResourceDefinition
kind: CustomResourceDefinition
kind: CSIDriver
kind: StorageClass
kind: DaemonSet
kind: Deployment
```

Here is the diff against the generated original (lines prefixed with `<` are my edited file, lines prefixed with `>` are the original). The defaults work fine for VMs on Hyper-V, but if your cluster includes Raspberry Pi or other arm64 nodes, you may need to replace some of the quay.io/minio images with the registry.k8s.io images, which support the arm64 architecture.

ref: https://github.com/minio/directpv/issues/592#issuecomment-1134143827

```
< # image: quay.io/minio/csi-node-driver-registrar@sha256:c805fdc166761218dc9478e7ac8e0ad0e42ad442269e75608823da3eb761e67e
< # https://github.com/kubernetes-csi/node-driver-registrar
< image: registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.10.0
---
> image: quay.io/minio/csi-node-driver-registrar@sha256:c805fdc166761218dc9478e7ac8e0ad0e42ad442269e75608823da3eb761e67e
1110,1112c1108
< # image: quay.io/minio/livenessprobe@sha256:f3bc9a84f149cd7362e4bd0ae8cd90b26ad020c2591bfe19e63ff97aacf806c3
< # https://github.com/kubernetes-csi/livenessprobe
< image: registry.k8s.io/sig-storage/livenessprobe:v2.12.0
---
> image: quay.io/minio/livenessprobe@sha256:f3bc9a84f149cd7362e4bd0ae8cd90b26ad020c2591bfe19e63ff97aacf806c3
```
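Instead of hand-editing the image lines each time the manifest is regenerated, the swap can be scripted. This is a sketch assuming GNU sed (for `-i` without a suffix argument) and the two image tags from the diff; `swap_directpv_images` is a hypothetical name, it does not add the commented-out reference lines, and any other images in the manifest are left untouched.

```shell
# Hypothetical helper: replace the quay.io/minio sidecar images in a generated
# directpv manifest with the registry.k8s.io images (which support arm64).
# Assumes GNU sed; with BSD/macOS sed, use `sed -i ''` instead.
swap_directpv_images() {
  manifest="$1"
  sed -i \
    -e 's#image: quay\.io/minio/csi-node-driver-registrar@sha256:[0-9a-f]*#image: registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.10.0#' \
    -e 's#image: quay\.io/minio/livenessprobe@sha256:[0-9a-f]*#image: registry.k8s.io/sig-storage/livenessprobe:v2.12.0#' \
    "$manifest"
}
```

Usage: `swap_directpv_images directpv-v4.0.10.yaml`, then review the result with `git diff` before committing.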

Then I update the infra-controllers kustomization to include the directpv installation manifest.

```diff
diff --git a/infrastructure/controllers/kustomization.yaml b/infrastructure/controllers/kustomization.yaml
index 5b7303d..19e1fd8 100644
--- a/infrastructure/controllers/kustomization.yaml
+++ b/infrastructure/controllers/kustomization.yaml
@@ -3,6 +3,7 @@ kind: Kustomization
 resources:
   # CRDs
   - crds/gateway-v1.0.0.yaml
+  - crds/directpv-v4.0.10.yaml
   # infra-controllers
   - sops.yaml
   - metallb.yaml
```
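A typo in a kustomization resource path only surfaces when flux reconciles, so a quick local check before pushing may save a round trip. A minimal sketch: `check_kustomization_resources` is a hypothetical helper that assumes every list item in the file is a local resource path without spaces (true for the kustomizations in this series, but not for remote URLs).

```shell
# Hypothetical pre-push check: verify that every "- <path>" item in
# <dir>/kustomization.yaml exists relative to <dir>.
# Assumes all list items are local file paths containing no spaces.
check_kustomization_resources() {
  dir="$1"
  missing=0
  for res in $(sed -n 's/^[[:space:]]*-[[:space:]][[:space:]]*\([^#[:space:]][^#[:space:]]*\).*/\1/p' "$dir/kustomization.yaml"); do
    if [ ! -e "$dir/$res" ]; then
      echo "missing resource: $res" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_kustomization_resources infrastructure/controllers && kubectl kustomize infrastructure/controllers >/dev/null`; the second command renders the kustomization and fails on any error kustomize itself detects.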

Here is the result.

```
$ kubectl get ns
NAME               STATUS   AGE
calico-apiserver   Active   6d19h
calico-system      Active   6d19h
default            Active   6d19h
directpv           Active   2s
flux-system        Active   5d3h
kube-node-lease    Active   6d19h
kube-public        Active   6d19h
kube-system        Active   6d19h
metallb            Active   43h
ngf                Active   3h26m
tigera-operator    Active   6d19h

$ kubectl get csidrivers
NAME              ATTACHREQUIRED   PODINFOONMOUNT   STORAGECAPACITY   TOKENREQUESTS   REQUIRESREPUBLISH   MODES                  AGE
csi.tigera.io     true             true             false             <unset>         false               Ephemeral              6d19h
directpv-min-io   false            true             false             <unset>         false               Persistent,Ephemeral   47s

$ kubectl get ds,deploy,rs -n directpv
NAME                         DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR                        AGE
daemonset.apps/node-server   1         1         1       1            1           app.kubernetes.io/part-of=directpv   12m

NAME                         READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/controller   3/3     3            3           12m

NAME                                    DESIRED   CURRENT   READY   AGE
replicaset.apps/controller-697fb86954   3         3         3       12m
```

### directpv drive setup

As mentioned, vworker5 is the node with the additional disk. This is the VHD attached to it, as seen from the Hyper-V host:

```
Get-VMHardDiskDrive -VMName vworker5 -ControllerNumber 2 | Get-VHD

ComputerName            : GALAX
Path                    : F:\hyperv\vhd\directpv_A202FDB3-8523-4362-B37B-B91E37534395.avhd
VhdFormat               : VHD
VhdType                 : Differencing
FileSize                : 692224
Size                    : 322122547200
MinimumSize             :
LogicalSectorSize       : 512
PhysicalSectorSize      : 512
BlockSize               : 2097152
ParentPath              : F:\hyperv\vhd\directpv.vhd
DiskIdentifier          : BBA5F617-CC36-477C-97D2-10D8A99D0BDC
FragmentationPercentage :
Alignment               : 1
Attached                : True
DiskNumber              :
IsPMEMCompatible        : False
AddressAbstractionType  : None
Number                  :
```

Running `kubectl-directpv discover` finds this drive and writes a drives.yaml file.

```
$ kubectl-directpv discover

 Discovered node 'vworker5' ✔

┌─────────────────────┬──────────┬───────┬─────────┬────────────┬─────────────────────┬───────────┬─────────────┐
│ ID                  │ NODE     │ DRIVE │ SIZE    │ FILESYSTEM │ MAKE                │ AVAILABLE │ DESCRIPTION │
├─────────────────────┼──────────┼───────┼─────────┼────────────┼─────────────────────┼───────────┼─────────────┤
│ 254:1$vYMMdKD/Uu... │ vworker5 │ dm-1  │ 980 MiB │ swap       │ vworker5--vg-swap_1 │ YES       │ -           │
│ 8:16$VjQgpubVGwz... │ vworker5 │ sdb   │ 300 GiB │ -          │ Msft Virtual_Disk   │ YES       │ -           │
└─────────────────────┴──────────┴───────┴─────────┴────────────┴─────────────────────┴───────────┴─────────────┘

Generated 'drives.yaml' successfully.
```

Edit the generated drives.yaml so that it lists only the disk you want directpv to use. My drives.yaml looks like this:

```yaml
version: v1
nodes:
  - name: vworker5
    drives:
      - id: 8:16$VjQgpubVGwzTGtDrPk6q1XQHUEZ2hwssRETVTiE46pk=
        name: sdb
        size: 322122547200
        make: Msft Virtual_Disk
        select: "yes"
```
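Because the next step erases every selected drive, a quick look at what is still selected may be worthwhile. This is a minimal sketch: `selected_drives` is a hypothetical helper that assumes the layout shown above (a `- name:` line per node, and `name:` appearing before `select:` in each drive entry).

```shell
# Hypothetical sanity check before `kubectl-directpv init --dangerous`:
# print <node>/<drive> for every drive whose select field is "yes".
# Assumes the drives.yaml layout produced by `kubectl-directpv discover`.
selected_drives() {
  awk '
    /^[ \t]*- name:/                     { node = $3 }   # node entry
    /^[ \t]*name:/                       { drive = $2 }  # drive entry
    /^[ \t]*select:[ \t]*"?yes"?[ \t]*$/ { print node "/" drive }
  ' "$1"
}
```

Usage: `selected_drives drives.yaml`. If it prints anything other than the drive you intend to wipe (vworker5/sdb here), fix drives.yaml before running init.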

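Finally, run init to initialize the selected drive for directpv. Because this erases the drive, init refuses to run until the --dangerous flag is added.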

```
$ kubectl-directpv init drives.yaml
ERROR Initializing the drives will permanently erase existing data. Please review carefully before performing this *DANGEROUS* operation and retry this command with --dangerous flag.

$ kubectl-directpv init drives.yaml --dangerous

 ███████████████████████████████████████████████████████████████████████████ 100%

 Processed initialization request 'b490b74d-703f-4488-898e-21362626f7b9' for node 'vworker5' ✔

┌──────────────────────────────────────┬──────────┬───────┬─────────┐
│ REQUEST_ID                           │ NODE     │ DRIVE │ MESSAGE │
├──────────────────────────────────────┼──────────┼───────┼─────────┤
│ b490b74d-703f-4488-898e-21362626f7b9 │ vworker5 │ sdb   │ Success │
└──────────────────────────────────────┴──────────┴───────┴─────────┘
```

I store this drives.yaml at ./infrastructure/configs/directpv/drives.yaml for reference only. It is not a Kubernetes manifest, so it is left out of the configs kustomization and flux does not process it.

#### usb ssd drive

```shell
# confirm the device
sudo fdisk -l

# create a new partition, assuming the device is at /dev/sdb
sudo fdisk /dev/sdb
# at the fdisk prompt:
#   d  delete existing partitions, if any (repeat until none remain)
#   n  create a new partition (accepting the defaults)
#   w  write the partition table and exit

# create a filesystem on the new partition
sudo mkfs.ext4 /dev/sdb1

# directpv discover should now find the drive, and init will reformat it
```

## MinIO

MinIO is an object storage solution that provides an Amazon Web Services S3-compatible API and supports all core S3 features. MinIO is built to deploy anywhere: public or private cloud, bare-metal infrastructure, orchestrated environments, and edge infrastructure.

### minio operator deployment using helm

```shell
cd ./infrastructure/controllers

# add the minio operator helm repository
helm repo add minio-operator https://operator.min.io

# check available charts and their versions
helm search repo minio-operator

# create a values file
# (skipped: the defaults are fine for deploying minio-operator)
# helm show values minio-operator/operator > minio-operator-values.yaml
```

As with metallb and ngf, I prepare a shell script (minio-operator.sh) to generate the minio-operator manifest. It also creates both the minio-operator and minio-tenant namespaces.

Note the additional `gateway-available` label on the minio-tenant namespace, whose web console and S3 service will be exposed through NGF.

```bash
#!/bin/bash

# create the namespaces
cat >minio-operator.yaml <<EOF
---
apiVersion: v1
kind: Namespace
metadata:
  name: minio-operator
  labels:
    service: minio
    type: infrastructure
EOF

# the label value is quoted so that YAML does not read yes as a boolean
cat >>minio-operator.yaml <<EOF
---
apiVersion: v1
kind: Namespace
metadata:
  name: minio-tenant
  labels:
    service: minio
    type: infrastructure
    gateway-available: "yes"
EOF

# add flux helmrepo
flux create source helm minio \
  --url=https://operator.min.io \
  --interval=1h0m0s \
  --export >>minio-operator.yaml

# add flux helm release
flux create helmrelease minio-operator \
  --interval=10m \
  --target-namespace=minio-operator \
  --source=HelmRepository/minio \
  --chart=operator \
  --chart-version=5.0.12 \
  --export >>minio-operator.yaml
```

Generate ./infrastructure/controllers/minio-operator.yaml by running the script, and then update the kustomization to include the minio operator manifest.

```diff
diff --git a/infrastructure/controllers/kustomization.yaml b/infrastructure/controllers/kustomization.yaml
index 19e1fd8..a3d2af2 100644
--- a/infrastructure/controllers/kustomization.yaml
+++ b/infrastructure/controllers/kustomization.yaml
@@ -8,3 +8,4 @@ resources:
   - sops.yaml
   - metallb.yaml
   - ngf.yaml
+  - minio-operator.yaml
```

Here is the result.

```
$ flux get source helm minio
NAME    REVISION          SUSPENDED   READY   MESSAGE
minio   sha256:a85473b4   False       True    stored artifact: revision 'sha256:a85473b4'

$ flux get hr minio-operator
NAME             REVISION   SUSPENDED   READY   MESSAGE
minio-operator   5.0.12     False       True    Helm install succeeded for release minio-operator/minio-operator-minio-operator.v1 with chart operator@5.0.12

$ kubectl get all -n minio-operator
NAME                                 READY   STATUS    RESTARTS   AGE
pod/console-6d5fb84464-49764         1/1     Running   0          35s
pod/minio-operator-9d788785b-n244m   1/1     Running   0          35s
pod/minio-operator-9d788785b-zjhqs   1/1     Running   0          35s

NAME               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
service/console    ClusterIP   10.96.150.5      <none>        9090/TCP,9443/TCP   35s
service/operator   ClusterIP   10.101.100.163   <none>        4221/TCP            35s
service/sts        ClusterIP   10.99.18.177     <none>        4223/TCP            35s

NAME                             READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/console          1/1     1            1           35s
deployment.apps/minio-operator   2/2     2            2           35s

NAME                                       DESIRED   CURRENT   READY   AGE
replicaset.apps/console-6d5fb84464         1         1         1       35s
replicaset.apps/minio-operator-9d788785b   2         2         2       35s
```

### minio tenant deployment using helm

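The tenant chart is in the same helm repo added in the previous step, so I go ahead and prepare the values file and edit it.

Here is the diff showing the changes (lines prefixed with `<` are my edited minio-tenant-values.yaml, lines prefixed with `>` are the chart defaults):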

- commented out the default credentials

- uncommented `existingSecret` so that it references myminio-env-configuration

- changed the server count and the volumes per server from 4 to 1

- changed the volume size from 10Gi to 80Gi

- set storageClassName to "directpv-min-io", the storage class created by the directpv installation above

- set requestAutoCert to false to run without TLS

```
19,21c19,21
<     # name: myminio-env-configuration
<     # accessKey: minio
<     # secretKey: minio123
---
>     name: myminio-env-configuration
>     accessKey: minio
>     secretKey: minio123
35,36c35,36
<   existingSecret:
<     name: myminio-env-configuration
---
>   #existingSecret:
>   #  name: myminio-env-configuration
96c96
<     - servers: 1
---
>     - servers: 4
102c102
<       volumesPerServer: 1
---
>       volumesPerServer: 4
105c105
<       size: 80Gi
---
>       size: 10Gi
112c112
<       storageClassName: directpv-min-io
---
>       # storageClassName: standard
224c224
<   requestAutoCert: false
---
>   requestAutoCert: true
```
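The pool's total request is servers × volumesPerServer × size, which is an easy way to check that the values fit the single 300 GiB directpv drive. A minimal sketch; `tenant_capacity_gib` is a hypothetical helper taking the size in Gi.

```shell
# Hypothetical helper: total storage a tenant pool requests, in GiB.
# Usage: tenant_capacity_gib <servers> <volumesPerServer> <sizeGi>
tenant_capacity_gib() {
  echo $(( $1 * $2 * $3 ))
}

tenant_capacity_gib 1 1 80   # edited values: prints 80
tenant_capacity_gib 4 4 10   # chart defaults: prints 160
```

With the edited values, 300 GiB minus 80 GiB leaves 220 GiB, which matches the FREE column of `kubectl-directpv list drives` in the result further down.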

Next, I need a secret holding the root credentials used to log in to the new tenant. This secret manifest goes in the separate homelab-sops repository. Replace ROOTUSERNAME and ROOTUSERPASSWORD with your own values, then encrypt the manifest in place by running `sops --encrypt -i myminio-env-configuration.yaml`.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: myminio-env-configuration
  namespace: minio-tenant
type: Opaque
stringData:
  config.env: |
    export MINIO_ROOT_USER=ROOTUSERNAME
    export MINIO_ROOT_PASSWORD=ROOTUSERPASSWORD
```
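To avoid typing a password by hand, the manifest can be generated with a random one before encrypting it. This is a sketch assuming a Linux shell with /dev/urandom; `random_password` and `minio_env_secret` are hypothetical helpers, and the 32-character length is an arbitrary choice (MinIO requires the root password to be at least 8 characters).

```shell
# Hypothetical helper: print a random 32-character alphanumeric password.
random_password() {
  LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c 32
}

# Hypothetical helper: print the tenant secret manifest for <user> <password>.
minio_env_secret() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: myminio-env-configuration
  namespace: minio-tenant
type: Opaque
stringData:
  config.env: |
    export MINIO_ROOT_USER=$1
    export MINIO_ROOT_PASSWORD=$2
EOF
}
```

Usage: `minio_env_secret admin "$(random_password)" > myminio-env-configuration.yaml && sops --encrypt -i myminio-env-configuration.yaml`.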

Back in the gitops/homelab repository, here is another script (minio-tenant.sh) to generate the minio-tenant manifest. It only generates the minio-tenant helm release, since the namespace and the helm repository are already defined in minio-operator.yaml.

```bash
#!/bin/bash

# add flux helm release
flux create helmrelease minio-tenant \
  --interval=10m \
  --target-namespace=minio-tenant \
  --source=HelmRepository/minio \
  --chart=tenant \
  --chart-version=5.0.12 \
  --values=minio-tenant-values.yaml \
  --export >minio-tenant.yaml
```

Then generate the minio-tenant manifest and include it in the kustomization.

```diff
diff --git a/infrastructure/controllers/kustomization.yaml b/infrastructure/controllers/kustomization.yaml
index a3d2af2..2cdb540 100644
--- a/infrastructure/controllers/kustomization.yaml
+++ b/infrastructure/controllers/kustomization.yaml
@@ -9,3 +9,4 @@ resources:
   - metallb.yaml
   - ngf.yaml
   - minio-operator.yaml
+  - minio-tenant.yaml
```

Here is the result.

```
$ kubectl get all -n minio-tenant
NAME                   READY   STATUS    RESTARTS   AGE
pod/myminio-pool-0-0   2/2     Running   0          16m

NAME                      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
service/minio             ClusterIP   10.97.120.17   <none>        80/TCP     16m
service/myminio-console   ClusterIP   10.105.76.58   <none>        9090/TCP   16m
service/myminio-hl        ClusterIP   None           <none>        9000/TCP   16m

NAME                              READY   AGE
statefulset.apps/myminio-pool-0   1/1     16m
```

The tenant's persistent volume is provisioned by directpv:

```
$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                 STORAGECLASS      VOLUMEATTRIBUTESCLASS   REASON   AGE
pvc-e719679a-8565-4d29-bfb9-ea37a1e9f07f   80Gi       RWO            Delete           Bound    minio-tenant/data0-myminio-pool-0-0   directpv-min-io   <unset>                          64m

$ kubectl get pvc -n minio-tenant
NAME                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      VOLUMEATTRIBUTESCLASS   AGE
data0-myminio-pool-0-0   Bound    pvc-e719679a-8565-4d29-bfb9-ea37a1e9f07f   80Gi       RWO            directpv-min-io   <unset>                 64m

$ kubectl-directpv list drives
┌──────────┬──────┬───────────────────┬─────────┬─────────┬─────────┬────────┐
│ NODE     │ NAME │ MAKE              │ SIZE    │ FREE    │ VOLUMES │ STATUS │
├──────────┼──────┼───────────────────┼─────────┼─────────┼─────────┼────────┤
│ vworker5 │ sdb  │ Msft Virtual_Disk │ 300 GiB │ 220 GiB │ 1       │ Ready  │
└──────────┴──────┴───────────────────┴─────────┴─────────┴─────────┴────────┘

$ kubectl-directpv list volumes
┌──────────────────────────────────────────┬──────────┬──────────┬───────┬──────────────────┬──────────────┬─────────┐
│ VOLUME                                   │ CAPACITY │ NODE     │ DRIVE │ PODNAME          │ PODNAMESPACE │ STATUS  │
├──────────────────────────────────────────┼──────────┼──────────┼───────┼──────────────────┼──────────────┼─────────┤
│ pvc-e719679a-8565-4d29-bfb9-ea37a1e9f07f │ 80 GiB   │ vworker5 │ sdb   │ myminio-pool-0-0 │ minio-tenant │ Bounded │
└──────────────────────────────────────────┴──────────┴──────────┴───────┴──────────────────┴──────────────┴─────────┘
```

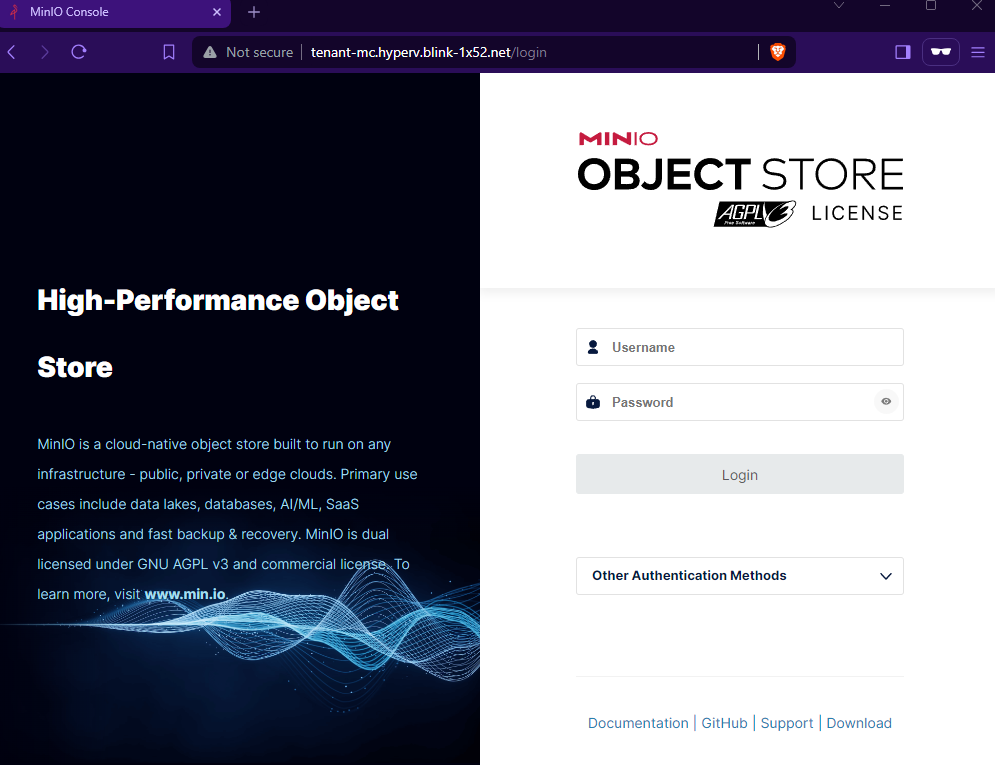

### web access to the minio tenant console

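The myminio-console service, listening on port 9090, serves the tenant's web console, and the minio service on port 80 serves the S3 API. I use the NGF gateway set up in part 3 to make both accessible on the LAN, with these HTTPRoutes in infrastructure/configs/minio-tenant.yaml: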

```yaml
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: minio-console
  namespace: minio-tenant
spec:
  parentRefs:
    - name: gateway
      sectionName: http
      namespace: gateway
  hostnames:
    - "tenant-mc.hyperv.blink-1x52.net"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: myminio-console
          port: 9090
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: minio-service
  namespace: minio-tenant
spec:
  parentRefs:
    - name: gateway
      sectionName: http
      namespace: gateway
  hostnames:
    - "s3.hyperv.blink-1x52.net"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: minio
          port: 80
```

Update the infra-configs kustomization (infrastructure/configs/kustomization.yaml) to include minio-tenant.yaml.

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - metallb-config.yaml
  - gateway.yaml
  - minio-tenant.yaml
```

Flux then creates the HTTPRoutes.

```
$ kubectl get httproutes -n minio-tenant
NAME            HOSTNAMES                             AGE
minio-console   ["tenant-mc.hyperv.blink-1x52.net"]   5m51s
minio-service   ["s3.hyperv.blink-1x52.net"]          5m51s
```

#### access to console

The console is now available over plain HTTP at http://tenant-mc.hyperv.blink-1x52.net.

## repository structure so far

```
.
|-clusters
| |-hyper-v
| | |-infrastructure.yaml
| | |-flux-system
| | | |-kustomization.yaml
| | | |-gotk-sync.yaml
| | | |-gotk-components.yaml
| | |-node-vworker5-labels.yaml # add label for directpv
|-infrastructure
| |-configs
| | |-kustomization.yaml
| | |-metallb-config.yaml
| | |-gateway.yaml
| | |-minio-tenant.yaml # httproutes for web console and s3 access on plain http
| | |-directpv
| | | |-drives.yaml # directpv yaml file stored as reference
| |-controllers
| | |-kustomization.yaml
| | |-minio-tenant-values.yaml # minio tenant helm values file
| | |-metallb.yaml
| | |-minio-tenant.sh # script to generate minio tenant helm release manifest
| | |-metallb.sh
| | |-minio-operator.yaml # minio operator manifest with namespaces, helmrepo, and helm release
| | |-default-values
| | | |-ngf-values.yaml
| | | |-metallb-values.yaml
| | | |-minio-tenant-values.yaml # default values file for minio tenant helm release
| | |-ngf-values.yaml
| | |-metallb-values.yaml
| | |-crds
| | | |-gateway-v1.0.0.yaml
| | | |-directpv-v4.0.10.yaml # directpv installation manifest file
| | |-minio-tenant.yaml # minio tenant manifest with helm release
| | |-sops.yaml
| | |-ngf.yaml
| | |-ngf.sh
| | |-minio-operator.sh # script to generate minio operator helmrepo, helm release, and namespaces minio-operator and minio-tenant
|-.git
```