MinIO S3 setup


MinIO is an object storage solution that provides an Amazon Web Services S3-compatible API and supports all core S3 features.

This is for my small homelab. I do not have many local disks to spare, nor do I intend to set up a full-featured S3 service with perfect replication and backup.

I will set up something that is good enough for personal infrastructure.

Documentation

https://min.io/docs/minio/kubernetes/upstream/index.html

Deployment checklist

There are three checklists (hardware, security, and software) for checking how closely your environment aligns with the recommended setup.

https://min.io/docs/minio/kubernetes/upstream/operations/checklists.html

In terms of hardware, what I can afford for my personal homelab is limited, and I won't provision five times the disk space I actually need just for replication. This is going to be a minimal setup with no replication and no multi-disk redundancy.

Disks loaded on the Longhorn system

This is for my lab cluster. My main cluster has a few more disks and volumes, but in this post I will work with what I configured during the previous Longhorn setup.

- lab-worker2 with 80GB disk

- lab-worker3 with 80GB disk

Changes to Longhorn since the previous post

As a side note, I made some minor changes to the Longhorn setup covered in the previous post:

- add the tag "xfs" to the two additional 80GB disks
- create a storage class with a disk selector, filesystem type, and reclaim policy customized to follow the recommendations below
- put all available disks back into the ready state (they won't be used for this MinIO S3 setup, though)

Additionally, MinIO recommends setting a reclaim policy of Retain for the PVC StorageClass. Where possible, configure the Storage Class, CSI, or other provisioner underlying the PV to format volumes as XFS to ensure best performance.

```yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: longhorn-xfs
parameters:
  staleReplicaTimeout: "30"
  diskSelector: xfs
  fsType: xfs
provisioner: driver.longhorn.io
reclaimPolicy: Retain
volumeBindingMode: Immediate
allowVolumeExpansion: true
```
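The two MinIO recommendations above (reclaim policy Retain, XFS volumes) are easy to lose track of if the StorageClass is edited later. As a rough safeguard, a naive line-based check like the hypothetical check_minio_sc below could be run against the manifest. It only greps for the two settings and is not a YAML parser, so treat it as a sketch rather than real validation.

```bash
# check_minio_sc FILE
# Naive, line-based check (not a YAML parser) that a StorageClass manifest
# sets reclaimPolicy: Retain and fsType: xfs. Prints each problem found and
# returns non-zero if any setting is missing.
check_minio_sc() {
  local file="$1" status=0
  if ! grep -Eq '^[[:space:]]*reclaimPolicy:[[:space:]]*Retain[[:space:]]*$' "$file"; then
    echo "reclaimPolicy is not Retain"
    status=1
  fi
  # fsType may be quoted or unquoted
  if ! grep -Eq '^[[:space:]]*fsType:[[:space:]]*"?xfs"?[[:space:]]*$' "$file"; then
    echo "fsType is not xfs"
    status=1
  fi
  return "$status"
}
```

For example, check_minio_sc ./infrastructure/lab-hlv3/configs/longhorn/sc-longhorn-xfs.yaml prints nothing and succeeds for the manifest shown here.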

MinIO installation

I will install minio-operator and minio-tenant using Helm charts.

MinIO is a Kubernetes-native high performance object store with an S3-compatible API. The MinIO Kubernetes Operator supports deploying MinIO Tenants onto private and public cloud infrastructures (“Hybrid” Cloud).

The MinIO Operator installs a Custom Resource Definition (CRD) to support describing MinIO tenants as a Kubernetes object.

A MinIO Tenant is a fully isolated, S3-compatible object storage deployment managed by the MinIO Operator within a Kubernetes cluster. Each tenant includes its own set of MinIO pods, volumes, configurations, and access credentials, enabling multi-tenant architectures where different users or teams can operate independently. Tenants are designed for high performance, scalability, and security, supporting use cases ranging from cloud-native applications to data lakes and AI/ML workloads.

I will install minio-operator through the infra-controllers Flux Kustomization, and one MinIO tenant for infrastructure through the infra-configs Flux Kustomization. I will name this tenant "infra-tenant" in case I later spin up a separate tenant for apps, although that is unlikely given the size and user count of my homelab Kubernetes cluster.

minio-operator

I am going to start by installing minio-operator.

Add the repository and confirm the version to use.

```bash
# add the repository
helm repo add minio-operator https://operator.min.io

# update helm repository whenever needed
# helm repo update

# see the latest version
helm search repo minio-operator/operator

# do not use the legacy minio-operator chart
```

Copy the default values file locally and edit it.

```bash
# on gitops repo
cd ./infrastructure/lab-hlv3/controllers/default-values
helm show values --version 7.0.1 minio-operator/operator > minio-operator-7.0.1-values.yaml
cp minio-operator-7.0.1-values.yaml ../values/minio-operator-values.yaml
# edit minio-operator-values.yaml file
```
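Keeping the untouched chart defaults next to the edited copy makes it easy to see exactly what was changed, which is handy when writing up a list of changes like the one in this post. A small hypothetical wrapper around diff -u could do that; it treats "files differ" (diff exit status 1) as success, since that is the expected case.

```bash
# values_changes DEFAULT_FILE EDITED_FILE
# Print a unified diff of the edited values file against the chart defaults.
# diff exits 0 (identical), 1 (differences found) or >1 (error, e.g. missing
# file); only the error case is reported as a failure here.
values_changes() {
  local rc=0
  diff -u "$1" "$2" || rc=$?
  (( rc <= 1 ))
}
```

Run from ./infrastructure/lab-hlv3/controllers, values_changes default-values/minio-operator-7.0.1-values.yaml values/minio-operator-values.yaml would show only the edited lines (plus context).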

Once the values file is ready, generate the Flux HelmRepository and HelmRelease manifests. I run this script to regenerate the manifests whenever I change the values file.

```bash
#!/bin/bash

# add flux helmrepo to the manifest
flux create source helm minio-operator \
  --url=https://operator.min.io \
  --interval=1h0m0s \
  --export >../minio-operator.yaml

# add flux helm release to the manifest including the customized values.yaml file
flux create helmrelease minio-operator \
  --interval=10m \
  --target-namespace=minio-operator \
  --source=HelmRepository/minio-operator \
  --chart=operator \
  --chart-version=7.0.1 \
  --values=../values/minio-operator-values.yaml \
  --export >>../minio-operator.yaml
```

Lastly, before adding the file to the infra-controllers Flux Kustomization, create the required target namespace, minio-operator. Once everything is set, push the changes and wait for reconciliation.
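The namespace manifest itself is not shown in this post. Assuming namespaces are kept as plain Namespace manifests under ./clusters/lab-hlv3/namespaces/ (as the per-tenant file list later in this post suggests), a hypothetical helper like render_namespace could print one, optionally with labels. kubectl create namespace NAME --dry-run=client -o yaml produces a similar manifest without labels.

```bash
# render_namespace NAME [KEY=VALUE ...]
# Print a Namespace manifest; each KEY=VALUE argument becomes a label.
render_namespace() {
  local name="$1"
  shift
  printf -- '---\napiVersion: v1\nkind: Namespace\nmetadata:\n  name: %s\n' "$name"
  if (( $# > 0 )); then
    printf '  labels:\n'
    local kv
    for kv in "$@"; do
      # split on the first "=": key before it, value after it
      printf '    %s: "%s"\n' "${kv%%=*}" "${kv#*=}"
    done
  fi
}
```

render_namespace minio-operator prints a label-free manifest, while render_namespace minio-infra-tenant gateway=cilium would add the label that the cilium gateway listeners in this post select on.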

minio-operator values file

Here is the list of changes made to the values file:

- operator
  - env
    - CLUSTER_DOMAIN=lab.blink-1x52.net added
  - replica count changed from 2 to 1

flux tree ks infra-controllers

This is the HelmRelease portion of the kustomization.

```text
# flux tree ks infra-controllers
├── HelmRelease/flux-system/minio-operator
│   ├── ServiceAccount/minio-operator/minio-operator
│   ├── CustomResourceDefinition/tenants.minio.min.io
│   ├── CustomResourceDefinition/policybindings.sts.min.io
│   ├── ClusterRole/minio-operator-role
│   ├── ClusterRoleBinding/minio-operator-binding
│   ├── Service/minio-operator/operator
│   ├── Service/minio-operator/sts
│   └── Deployment/minio-operator/minio-operator
```

minio-tenant

The installation procedure is similar to the minio-operator installation.

The Helm chart for the tenant is in the same repository added earlier.

```bash
# add the repository
# helm repo add minio-operator https://operator.min.io

# update helm repository whenever needed
# helm repo update

# see the latest version
helm search repo minio-operator/tenant
```

Copy the default values file locally and edit it.

```bash
# on gitops repo
cd ./infrastructure/lab-hlv3/controllers/default-values
helm show values --version 7.0.1 minio-operator/tenant > minio-tenant-7.0.1-values.yaml
# the tenant values live under configs/values, named after the tenant
cp minio-tenant-7.0.1-values.yaml ../../configs/values/minio-infra-tenant-values.yaml
# edit minio-infra-tenant-values.yaml file
```

Once the file is ready, I run a slightly different script to generate the Flux HelmRelease manifest. Since the minio-operator HelmRepository also serves the tenant chart, this script only creates the HelmRelease. The script is placed at ./infrastructure/lab-hlv3/configs/scripts/minio-infra-tenant.sh, and the generated manifest, which the infra-configs Flux Kustomization includes, is written to ./infrastructure/lab-hlv3/configs/minio-tenant/infra-tenant.yaml.

For each MinIO tenant added, there are the following files:

- namespace: ./clusters/lab-hlv3/namespaces/minio-TENANT_NAME.yaml (or you could share one namespace among all tenants)
- Helm chart values file: ./infrastructure/lab-hlv3/configs/values/minio-TENANT_NAME-values.yaml
- script that runs flux create helmrelease to generate the manifest: ./infrastructure/lab-hlv3/configs/scripts/minio-TENANT_NAME.sh
- generated Flux HelmRelease manifest: ./infrastructure/lab-hlv3/configs/minio-tenant/TENANT_NAME.yaml

```bash
#!/bin/bash

# add flux helm release to the manifest including the customized values.yaml file
# (">" overwrites the manifest, so rerunning the script does not append a duplicate)
flux create helmrelease minio-infra-tenant \
  --interval=10m \
  --target-namespace=minio-infra-tenant \
  --source=HelmRepository/minio-operator \
  --chart=tenant \
  --chart-version=7.0.1 \
  --values=../values/minio-infra-tenant-values.yaml \
  --export >../minio-tenant/infra-tenant.yaml
```
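Because every per-tenant name and path follows the TENANT_NAME convention in the file list above, one script could serve all tenants instead of one copy per tenant. Here is a sketch of that idea; gen_tenant_release and the DRY_RUN switch are hypothetical, and the flux flags are the same ones used in the script above. It is meant to be run from ./infrastructure/lab-hlv3/configs/scripts.

```bash
# gen_tenant_release TENANT_NAME [CHART_VERSION]
# Derive release name, namespace, values file and output manifest from
# TENANT_NAME, then run flux create helmrelease. With DRY_RUN=1 the command
# is printed instead of executed.
gen_tenant_release() {
  local tenant="$1" version="${2:-7.0.1}"
  local release="minio-${tenant}"
  local namespace="minio-${tenant}"
  local values="../values/minio-${tenant}-values.yaml"
  local manifest="../minio-tenant/${tenant}.yaml"
  local -a cmd
  cmd=(flux create helmrelease "$release"
    --interval=10m
    --target-namespace="$namespace"
    --source=HelmRepository/minio-operator
    --chart=tenant
    --chart-version="$version"
    --values="$values"
    --export)
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s > %s\n' "${cmd[*]}" "$manifest"
  else
    "${cmd[@]}" > "$manifest"   # overwrite, so reruns stay idempotent
  fi
}
```

DRY_RUN=1 gen_tenant_release infra-tenant prints the same command as the script above, which is a cheap way to check the derived names before writing anything.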

minio-tenant values file for infra-tenant

Here is the list of changes made to the values file:

- tenant
  - name: infra-tenant
  - configuration
    - name: infra-tenant-env-configuration
  - configSecret
    - name: infra-tenant-env-configuration
    - accessKey: (blank)
    - secretKey: (blank)
    - existingSecret: true (so the chart does not create its own secret and the tenant uses the one prepared below)
  - pools
    - servers: 1
    - volumesPerServer: 1
    - size: 60Gi
    - storageClassName: longhorn-xfs
  - certificate
    - requestAutoCert: false

Preparing secret containing env config

Create a secret like the example below, with a key named "config.env" that holds the environment variables. I place the file in the directory for the sops Flux Kustomization and encrypt it with sops before pushing the change.

These values become the tenant's root credentials, which are also used to log in to the tenant admin UI. Change the username and password when you set this up in your own environment.

```yaml
# ./sops/lab-hlv3/minio-tenant/infra-tenant-env-configuration.yaml
---
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: infra-tenant-env-configuration
  namespace: minio-infra-tenant
stringData:
  config.env: |-
    export MINIO_ROOT_USER=minio
    export MINIO_ROOT_PASSWORD=minio123
```
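Rather than typing the root password by hand, the same Secret could be rendered with a randomly generated password and then encrypted. This is a minimal sketch; random_password and render_env_secret are hypothetical helpers, and the manifest they print has the same shape as the example above.

```bash
# random_password [LENGTH]: print LENGTH (default 32) random alphanumerics
random_password() {
  local length="${1:-32}"
  # keep only [A-Za-z0-9] from /dev/urandom; head stops after LENGTH bytes
  LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c "$length"
  echo
}

# render_env_secret NAME NAMESPACE ROOT_USER ROOT_PASSWORD
# Print a Secret whose config.env key exports the MinIO root credentials.
render_env_secret() {
  local name="$1" namespace="$2" user="$3" password="$4"
  cat <<EOF
---
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: ${name}
  namespace: ${namespace}
stringData:
  config.env: |-
    export MINIO_ROOT_USER=${user}
    export MINIO_ROOT_PASSWORD=${password}
EOF
}
```

For example, render_env_secret infra-tenant-env-configuration minio-infra-tenant minio "$(random_password 32)" > infra-tenant-env-configuration.yaml, followed by sops --encrypt --in-place infra-tenant-env-configuration.yaml, assuming a .sops.yaml creation rule already matches that path.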

New storage class longhorn-xfs

I already mentioned this at the beginning, but here is the new storage class "longhorn-xfs" that MinIO tenants use to get their persistent volumes provisioned.

```yaml
# ./infrastructure/lab-hlv3/configs/longhorn/sc-longhorn-xfs.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: longhorn-xfs
parameters:
  staleReplicaTimeout: "30"
  diskSelector: xfs
  fsType: xfs
provisioner: driver.longhorn.io
reclaimPolicy: Retain
volumeBindingMode: Immediate
allowVolumeExpansion: true
```

flux tree ks infra-configs

Here is the result after the installation.

```text
$ flux tree ks infra-configs
Kustomization/flux-system/infra-configs
├── StorageClass/longhorn-xfs
└── HelmRelease/flux-system/infra-tenant
    └── Tenant/minio-infra-tenant/infra-tenant

$ kubectl get all -n minio-infra-tenant
NAME                        READY   STATUS    RESTARTS        AGE
pod/infra-tenant-pool-0-0   2/2     Running   2 (4h23m ago)   4h24m

NAME                           TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
service/infra-tenant-console   ClusterIP   10.96.6.195     <none>        9090/TCP   4h24m
service/infra-tenant-hl        ClusterIP   None            <none>        9000/TCP   4h24m
service/minio                  ClusterIP   10.96.186.251   <none>        80/TCP     4h24m

NAME                                   READY   AGE
statefulset.apps/infra-tenant-pool-0   1/1     4h24m
```

MinIO tenant UI access

Next, I would like to set up web UI access, which takes three easy steps:

- identify the service to connect to

- add the new listener on the gateway

- add the new HTTPRoute to connect the gateway and service

The name of the service is infra-tenant-console.

Here is the listener added to the existing cilium gateway at .spec.listeners[].

```yaml
- name: minio-infra-tenant-https
  hostname: infra-tenant.lab.blink-1x52.net
  port: 443
  protocol: HTTPS
  allowedRoutes:
    namespaces:
      from: Selector
      selector:
        matchLabels:
          gateway: cilium
  tls:
    mode: Terminate
    certificateRefs:
      - name: tls-minio-infra-tenant
        kind: Secret
        namespace: gateway
```

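And here is the HTTPRoute. Because the listener only accepts routes from namespaces labeled gateway: cilium, the minio-infra-tenant namespace needs that label for the route to attach.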

```yaml
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: minio-infra-tenant-https
  namespace: minio-infra-tenant
spec:
  parentRefs:
    - name: cilium-gateway
      sectionName: minio-infra-tenant-https
      namespace: gateway
  hostnames:
    - "infra-tenant.lab.blink-1x52.net"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: infra-tenant-console
          port: 9090
```
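The console route here and the S3 route in the next section differ only in name, hostname, and backend service/port. Assuming the route name always matches the listener's sectionName (true for both routes in this post), a hypothetical render_httproute helper could print either one from five arguments.

```bash
# render_httproute NAME NAMESPACE HOSTNAME SERVICE PORT
# Print an HTTPRoute attached to the cilium-gateway listener named NAME,
# sending every path on HOSTNAME to SERVICE:PORT.
render_httproute() {
  local name="$1" namespace="$2" hostname="$3" service="$4" port="$5"
  cat <<EOF
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  parentRefs:
    - name: cilium-gateway
      sectionName: ${name}
      namespace: gateway
  hostnames:
    - "${hostname}"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: ${service}
          port: ${port}
EOF
}
```

render_httproute minio-infra-tenant-https minio-infra-tenant infra-tenant.lab.blink-1x52.net infra-tenant-console 9090 reproduces the route above.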

Tenant S3 service access

I want the S3 service to be reachable through my cilium gateway as well, so here it is.

This is the listener to be added to the existing cilium gateway.

```yaml
- name: s3-infra-tenant-https
  hostname: s3-infra-tenant.lab.blink-1x52.net
  port: 443
  protocol: HTTPS
  allowedRoutes:
    namespaces:
      from: Selector
      selector:
        matchLabels:
          gateway: cilium
  tls:
    mode: Terminate
    certificateRefs:
      - name: tls-s3-infra-tenant
        kind: Secret
        namespace: gateway
```

And this is the HTTPRoute.

```yaml
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: s3-infra-tenant-https
  namespace: minio-infra-tenant
spec:
  parentRefs:
    - name: cilium-gateway
      sectionName: s3-infra-tenant-https
      namespace: gateway
  hostnames:
    - "s3-infra-tenant.lab.blink-1x52.net"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: minio
          port: 80
```
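A quick way to confirm the S3 route works end to end is MinIO's unauthenticated liveness endpoint, /minio/health/live, which answers 200 when the server is up. Here is a minimal sketch; the function names are hypothetical and it assumes curl is installed and the gateway certificate is trusted by the client.

```bash
# minio_live_url HOST: build the MinIO liveness-probe URL for an S3 hostname
minio_live_url() {
  printf 'https://%s/minio/health/live\n' "$1"
}

# minio_is_live HOST: probe the endpoint and print the HTTP status code;
# curl -f makes the call fail on 4xx/5xx responses
minio_is_live() {
  curl -fsS -o /dev/null -w '%{http_code}\n' "$(minio_live_url "$1")"
}
```

minio_is_live s3-infra-tenant.lab.blink-1x52.net should print 200 once the listener, route, and certificate are all in place.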

Enable GitLab Runner cache

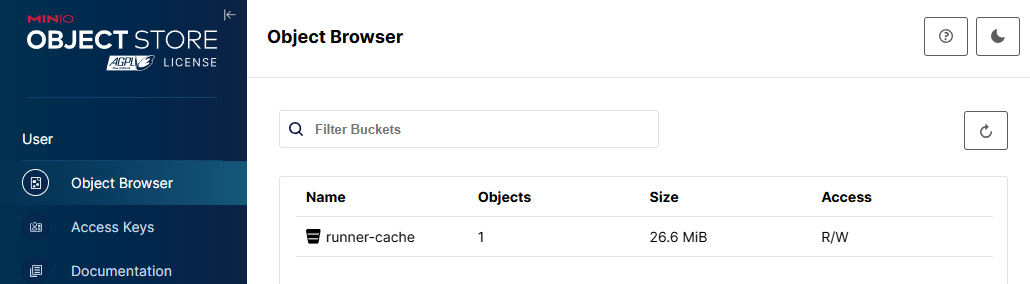

I have a GitLab Runner that uses a cache. I will create an S3 bucket for the runners to store that cache in.

- Create a bucket named "runner-cache"

- Create a policy named "rw-runner-cache" that allows read-write access to the "runner-cache" bucket

- Create a user named "gitlab-runner" and give it a password

- Attach the "rw-runner-cache" policy to the "gitlab-runner" user

The username and password serve as the access key and secret key, respectively.
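The same four steps can also be done with the MinIO client (mc) instead of the tenant UI. This is a sketch assuming a recent mc release (older ones used mc admin policy add/set instead of create/attach) and an mc alias already registered for the tenant; setup_runner_cache, the run wrapper, and the DRY_RUN switch are hypothetical. The policy file is the read-write policy JSON for the bucket, saved locally.

```bash
# run CMD...: execute a command, or just print it when DRY_RUN=1
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# setup_runner_cache MC_ALIAS BUCKET POLICY USER PASSWORD POLICY_FILE
setup_runner_cache() {
  local mc_alias="$1" bucket="$2" policy="$3" user="$4" password="$5" policy_file="$6"
  run mc mb --ignore-existing "${mc_alias}/${bucket}"              # create the bucket
  run mc admin policy create "$mc_alias" "$policy" "$policy_file"  # register the policy
  run mc admin user add "$mc_alias" "$user" "$password"            # access key / secret key
  run mc admin policy attach "$mc_alias" "$policy" --user "$user"  # grant the policy
}
```

For example, after mc alias set lab https://s3-infra-tenant.lab.blink-1x52.net ROOT_USER ROOT_PASSWORD ("lab" is an arbitrary alias name), run setup_runner_cache lab runner-cache rw-runner-cache gitlab-runner 'USER_PASSWORD' rw-runner-cache.json, or prefix it with DRY_RUN=1 to review the commands first.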

Read-write policy limited to certain buckets

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:*"],
      "Resource": ["arn:aws:s3:::runner-cache/*", "arn:aws:s3:::runner-cache"]
    }
  ]
}
```
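The policy grants s3:* on two resources: the bucket itself (needed for bucket-level calls such as listing) and every object inside it (bucket/*). If more buckets get the same treatment later, a hypothetical rw_bucket_policy helper could render the identical document for any bucket name.

```bash
# rw_bucket_policy BUCKET
# Print a policy allowing all S3 actions on BUCKET and on every object in it.
rw_bucket_policy() {
  local bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:*"],
      "Resource": ["arn:aws:s3:::${bucket}/*", "arn:aws:s3:::${bucket}"]
    }
  ]
}
EOF
}
```

rw_bucket_policy runner-cache > rw-runner-cache.json produces the policy shown above.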

GitLab Runner configuration file

My latest GitLab Runner runs as a Docker container, and its configuration file is at /etc/gitlab-runner/config.toml.

I added Type = "s3" to the [runners.cache] section and filled in everything needed to access the MinIO S3 tenant in the [runners.cache.s3] section.

```toml
concurrent = 1
check_interval = 0
shutdown_timeout = 0

[session_server]
  session_timeout = 1800

[[runners]]
  name = "docker runner"
  url = "https://GITLAB_HOST"
  id = 14
  token = "GITLAB_RUNNER_TOKEN"
  token_obtained_at = 2025-04-04T06:04:33Z
  token_expires_at = 0001-01-01T00:00:00Z
  executor = "docker"
  clone_url = "https://GITLAB_HOST"
  [runners.cache]
    MaxUploadedArchiveSize = 0
    Type = "s3"
    [runners.cache.s3]
      ServerAddress = "s3-infra-tenant.lab.blink-1x52.net"
      BucketName = "runner-cache"
      BucketLocation = "lab-hlv3"
      Insecure = false
      AuthenticationType = "access-key"
      AccessKey = "gitlab-runner"
      SecretKey = "USER_PASSWORD"
    [runners.cache.gcs]
    [runners.cache.azure]
  [runners.docker]
    tls_verify = false
    image = "alpine:latest"
    privileged = false
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/cache"]
    shm_size = 0
    network_mtu = 0
```

Job log

Here is part of the GitLab Runner job log:

```text
Saving cache for successful job 00:02
Creating cache xxx-protected...
/xxx/.cache/pip: found 568 matching artifact files and directories
Uploading cache.zip to https://s3-infra-tenant.lab.blink-1x52.net/runner-cache/runner/t1_CZUpzY/project/148/xxx-protected
Created cache
Uploading artifacts for successful job 00:01
Uploading artifacts...
public/: found 333 matching artifact files and directories
Uploading artifacts as "archive" to coordinator... 201 Created id=3647 responseStatus=201 Created token=xxx
Cleaning up project directory and file based variables 00:00
Job succeeded
```

And you can see it on the tenant UI.
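Besides the tenant UI, the uploaded object can be checked from the command line. The "Uploading cache.zip to ..." line in the job log contains the bucket and object path, so a hypothetical cache_object_from_log helper could extract BUCKET/KEY from it (dropping the scheme, host, and any query string) for use with mc stat. This is a sketch that assumes the log line format shown above.

```bash
# cache_object_from_log LINE
# Extract BUCKET/KEY from a GitLab Runner "Uploading cache.zip to URL" line.
# Returns non-zero if the line does not contain that marker.
cache_object_from_log() {
  local line="$1" url rest
  url="${line#*Uploading cache.zip to }"   # drop everything before the URL
  [[ "$url" == "$line" ]] && return 1      # marker not found
  url="${url%%\?*}"                        # drop a query string, if any
  rest="${url#*://}"                       # drop the scheme
  printf '%s\n' "${rest#*/}"               # drop the host, keep BUCKET/KEY
}
```

With an mc alias named lab pointing at the tenant, mc stat "lab/$(cache_object_from_log "$line")" would show the object's size and modification time.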